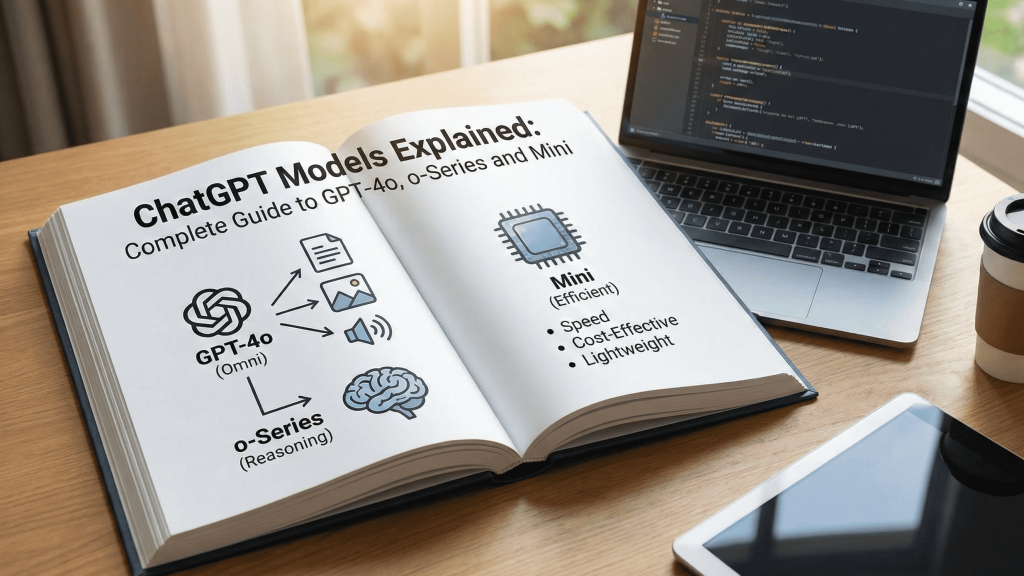

ChatGPT is no longer a single model. It is a lineup of models with different strengths, prices and use cases. There are general‑purpose models like GPT‑4o, deep‑thinking models like the o‑series, and lightweight fast models such as the mini variants. The goal of this guide is to give you a clear mental map: what makes each model different, when to choose it, and how to combine them so you get the best quality at the lowest reasonable cost.

1. Overview of the ChatGPT Model Family

According to OpenAI’s documentation and recent independent reports, ChatGPT models can be grouped into three broad categories:

- General, multimodal models like GPT‑4o that handle text, images, audio and sometimes video.

- Deliberative “o‑series” models (such as o1 and o3‑mini) designed to think longer and solve hard math, science and coding problems.

- Mini or “light” models that retain much of the larger models’ capability at a lower price and higher speed, intended for high‑volume workloads.

2. GPT‑4o: The Default General‑Purpose Model

GPT‑4o is the default model for most users because it balances intelligence, speed, cost and multimodal abilities. Comparisons show it is strong at general conversation, content writing and many coding tasks. It can also do live web research through the built‑in Search feature, which lets it pull fresh information from the internet and cite sources. If you want one model for most day‑to‑day work, GPT‑4o is usually the right starting point.

3. The o‑Series: When You Need Deeper Reasoning

Models like o1 and o3‑mini are built to “think more” before answering. Instead of generating a response immediately, they run a long internal reasoning chain and only then produce the final answer. On difficult math, science and programming benchmarks, the o‑series clearly outperforms GPT‑4o, but it is slower and more expensive per request. That makes it ideal for high‑stakes technical problems, but overkill for simple Q&A, blog posts or casual coding help.

4. Mini Models: Speed and Cost Efficiency

Mini models such as GPT‑4.1 mini deliver a large portion of the flagship models’ capabilities at a much lower price and with faster responses. They are well suited to routine, high‑volume tasks like automated customer support, bulk document processing or background agents that make many calls per day. Text quality is slightly lower than GPT‑4o’s, but the difference is small for simple applications, while the cost savings can be substantial.
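To see how the savings add up at volume, here is a rough back‑of‑the‑envelope sketch. The prices in it are made‑up placeholders, not real OpenAI pricing, and the function name `monthly_cost` is hypothetical. It also assumes a single blended price per million tokens, which ignores the usual split between input and output token prices.

```python
def monthly_cost(requests_per_day, tokens_per_request,
                 price_per_million_tokens, days=30):
    """Estimate monthly spend from volume and a blended token price."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Placeholder prices in dollars per million tokens.
# These are NOT real prices; check the current pricing page before budgeting.
FLAGSHIP_PRICE = 5.00
MINI_PRICE = 0.50

# Hypothetical workload: 10,000 requests a day, about 1,000 tokens each.
flagship = monthly_cost(10_000, 1_000, FLAGSHIP_PRICE)
mini = monthly_cost(10_000, 1_000, MINI_PRICE)

print(f"Flagship model: ${flagship:,.2f} per month")
print(f"Mini model:     ${mini:,.2f} per month")
print(f"Difference:     ${flagship - mini:,.2f} per month")
```

Swap in your own request volume and the current published prices to get a realistic estimate for your workload.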

5. Building a Smart Usage Strategy

Instead of forcing one model to handle everything, it is more efficient to match models to task criticality:

- Use a mini model for simple, repetitive tasks at large scale.

- Use GPT‑4o for most everyday work where you want a balance of quality and price.

- Reserve the o‑series for truly hard problems where accuracy and deep reasoning matter more than speed or cost.

This way you benefit from each model’s strengths while keeping your overall AI bill under control.
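If you call the models from your own code, the three‑tier rule above can be expressed as a small lookup. This is only a minimal sketch: the `Criticality` enum and `choose_model` function are hypothetical names, and the model identifiers are illustrative strings based on the models mentioned in this article, so confirm the exact names your account offers before using them.

```python
from enum import Enum


class Criticality(Enum):
    ROUTINE = "routine"    # simple, repetitive tasks at large scale
    EVERYDAY = "everyday"  # most day-to-day work
    HARD = "hard"          # hard problems that need deep reasoning


# Assumed mapping from task tier to model name (illustrative only).
MODEL_BY_CRITICALITY = {
    Criticality.ROUTINE: "gpt-4.1-mini",
    Criticality.EVERYDAY: "gpt-4o",
    Criticality.HARD: "o3-mini",
}


def choose_model(criticality: Criticality) -> str:
    """Return the model name assigned to a task tier."""
    return MODEL_BY_CRITICALITY[criticality]


print(choose_model(Criticality.ROUTINE))
print(choose_model(Criticality.HARD))
```

Keeping the mapping in one place means you can swap a model for a whole tier without touching the code that makes the requests.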