I’ll never forget the first time I generated an image with AI. It was early 2023, and I typed something ridiculously simple into Midjourney, “a cat wearing a space helmet on Mars,” fully expecting a blurry, distorted mess. What I got back stopped me mid-scroll. The image wasn’t just recognizable. It was beautiful. The lighting was cinematic, the details were crisp, and honestly, it looked like something a professional digital artist might have spent hours creating.

That moment changed everything for me. Not because the technology was perfect (it wasn’t), but because I realized we’d crossed a threshold. Creating professional-quality images was no longer locked behind years of design training or expensive software licenses. Anyone with an idea and an internet connection could now bring visual concepts to life in seconds.

Fast forward to 2026, and the AI image generation landscape has evolved beyond what most of us imagined possible just a few years ago. We’re not talking about generating quirky novelty images anymore—though you can certainly still do that. We’re talking about tools that professional designers, marketers, filmmakers, and businesses use daily to create everything from product photography to concept art to marketing campaigns.

The numbers tell part of the story. According to recent industry data, Stable Diffusion alone commanded 80% of the AI-generated image market as of 2024, producing 12.59 billion images. Midjourney reached over 10 million active users by mid-2026, with daily image generations exceeding 500 million. The AI image generator market itself reached $418.5 million in 2024 and is projected to expand to $60.8 billion by 2030, a staggering growth rate that reflects how quickly this technology has moved from experimental to essential.

But statistics only capture part of what’s happening. The real transformation is in how these tools have democratized visual creativity. A small business owner can now generate professional product photos without hiring a photographer. A writer can create book cover concepts in minutes instead of weeks. A marketing team can test dozens of ad creative variations before spending a dime on production.

This isn’t just about convenience or cost savings, though those matter enormously. It’s about removing the barriers between imagination and creation. When you can iterate on visual ideas as quickly as you can think them, creativity itself changes. You become bolder, more experimental, willing to try things you never would have attempted when each attempt required hours of work or hundreds of dollars.

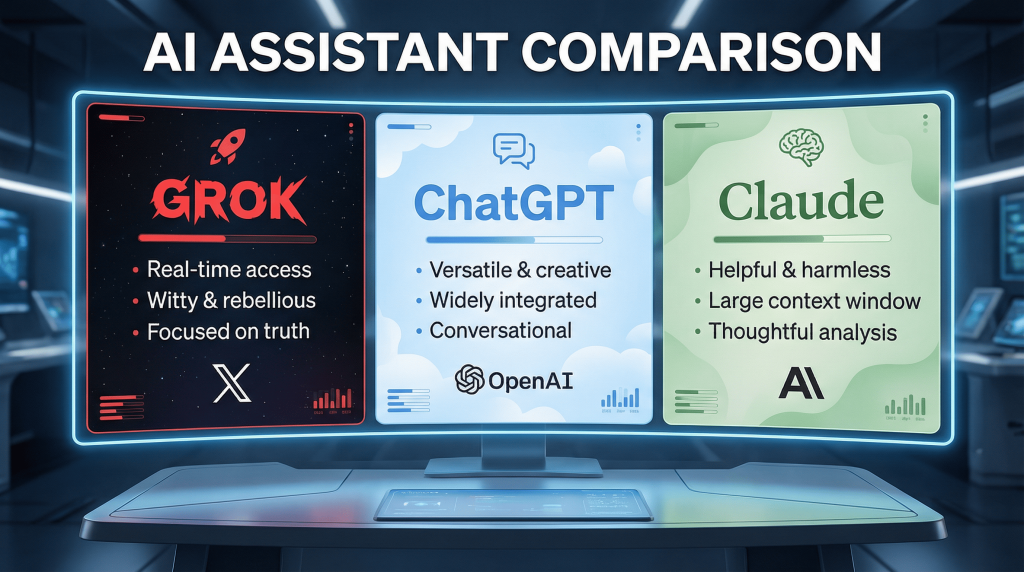

That said, the AI image generation space in 2026 is complex. There are at least a dozen major platforms, each with different strengths, pricing models, and philosophies. Midjourney excels at artistic, high-end visuals but grew up inside Discord, which can feel unfamiliar to newcomers. DALL-E integrates seamlessly with ChatGPT for conversational image creation but has limitations around customization. Stable Diffusion offers unparalleled control and a thriving open-source community but comes with a steeper learning curve.

This guide cuts through that complexity. Whether you’re a complete beginner wondering which tool to try first, a professional designer evaluating AI for your workflow, or a business leader trying to understand what’s actually possible with these technologies, you’ll find what you need here. We’ll explore how these tools actually work, compare the major platforms honestly (including their limitations), dive deep into practical use cases, and address the thorny questions around copyright and ethics that anyone serious about using AI images needs to understand.

Understanding How AI Image Generation Actually Works

Before we dive into specific tools and techniques, it helps to understand what’s actually happening under the hood when you type a prompt and an AI generates an image. You don’t need a Ph.D. in machine learning to use these tools effectively, but having a basic mental model helps you get better results and understand why things work the way they do.

At their core, modern AI image generators use something called “diffusion models.” The name sounds technical, but the concept is surprisingly intuitive once you see it explained. Imagine you have a photograph, and you gradually add more and more random noise to it—static, like an old TV losing its signal—until the original image is completely unrecognizable, just visual chaos. A diffusion model learns to reverse that process. It starts with pure noise and gradually removes it, guided by your text prompt, until a clear image emerges.
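The noising-and-denoising idea can be made concrete in a few lines of Python. This is a minimal sketch under stated assumptions, not any product's implementation: it assumes a linear noise schedule (the `beta_start` and `beta_end` values are illustrative), treats an “image” as a short list of numbers, and hands the reverse step the true noise, standing in for the neural network that a real model trains to predict it.

```python
import math
import random


def noise_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal surviving after each step (num_steps >= 2)."""
    surviving, running = [], 1.0
    for t in range(num_steps):
        # Assumed linear schedule: a little more noise is mixed in at every step.
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        surviving.append(running)
    return surviving


def add_noise(pixels, signal_fraction, rng):
    """Forward process: blend the clean values with Gaussian static."""
    keep = math.sqrt(signal_fraction)
    mix = math.sqrt(1.0 - signal_fraction)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [keep * p + mix * n for p, n in zip(pixels, noise)]
    return noisy, noise


def remove_noise(noisy, predicted_noise, signal_fraction):
    """Reverse idea: subtract the predicted static and rescale."""
    keep = math.sqrt(signal_fraction)
    mix = math.sqrt(1.0 - signal_fraction)
    return [(x - mix * n) / keep for x, n in zip(noisy, predicted_noise)]


rng = random.Random(0)
image = [0.2, 0.8, -0.5, 1.0]          # stand-in for pixel values
schedule = noise_schedule(1000)

# After the final step almost none of the original signal is left.
noisy, noise = add_noise(image, schedule[-1], rng)

# A trained model would have to predict `noise`; here we cheat and reuse it.
restored = remove_noise(noisy, noise, schedule[-1])
print([round(v, 3) for v in restored])
```

Real generators take many small denoising steps rather than one jump, and the noise prediction at each step is conditioned on the text prompt. That conditioning is where the prompt steers the result.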

The training process is what makes this possible. These models are trained on millions or billions of image-text pairs scraped from the internet. During training, the model learns associations between words and visual patterns. It learns that “sunset” often involves orange and pink gradients near horizons, that “cat” involves certain shapes and fur patterns, that “cyberpunk” tends to include neon lights and futuristic cityscapes. The model doesn’t “understand” these concepts the way humans do, but it learns incredibly sophisticated statistical relationships between language and visual elements.

What makes 2026’s models particularly impressive is their sophistication rather than sheer size. When OpenAI released the original DALL-E in 2021, it used a 12-billion parameter model. That sounds massive, but parameter count alone no longer tells the story: current state-of-the-art models rely on more capable architectures. Stable Diffusion 3.5 Large uses 8.1 billion parameters with a Multimodal Diffusion Transformer architecture, while Midjourney V7, released on April 3, 2025, brought significant improvements in prompt understanding and image quality.

The gains come less from raw scale than from how these models process information and how people interact with them. Midjourney’s latest Style Creator updates emphasize open-ended exploration, with features like mood boards, bookmarking, and adaptive preference learning that help artists discover and refine unique styles. The platform has shifted from rigid, outcome-driven generation to something more fluid and collaborative.

The way these models understand text has also evolved dramatically. Early image generators struggled with complex prompts. If you asked for “a red ball on a blue table,” you might get a blue ball on a red table, or everything red, or complete chaos. Current models handle multi-object scenes, spatial relationships, and detailed stylistic instructions with remarkable accuracy. DALL-E 3’s integration with ChatGPT means you can have a conversation to refine your prompt, effectively teaching the AI what you want through iteration rather than trying to craft the perfect prompt on the first try.

One crucial thing to understand: these models don’t “search” for images or composite existing photos. They genuinely synthesize new images, starting from noise and refining the whole picture over many steps. When you generate “a steampunk elephant playing chess in Paris,” the model isn’t finding pictures of elephants and chess boards and Paris and mashing them together. It’s synthesizing an entirely new image based on its learned understanding of what those concepts look like and how they relate spatially and stylistically.

This matters for several reasons. First, it means you can create images of things that have never been photographed or drawn. Want to see what a medieval castle would look like on Mars? An AI can generate that because it understands both castles and Martian landscapes and can combine them in novel ways. Second, it explains why these tools can match specific artistic styles—they’ve learned the visual patterns that define impressionism, anime, photorealism, or any other style represented in their training data.

The process isn’t perfect, of course. AI image generators still struggle with certain things. Hands remain notoriously difficult, though this has improved dramatically with recent models. Text rendering was nearly impossible in early versions but is now quite reliable in tools like DALL-E 3 and newer Midjourney iterations. Complex scenes with many interacting subjects can still produce weird results if your prompt isn’t specific enough.

Understanding these fundamentals helps you become a better prompter. You learn to work with the model’s strengths, provide enough specificity to guide generation without over-constraining creativity, and recognize when you’re asking for something that’s at the edge of current capabilities. It’s the difference between fighting against the tool and collaborating with it.

For deeper exploration of specific platforms and how to get the most out of each one, check out our specialized guides: Complete Midjourney Guide for 2026, DALL-E 3: Everything You Need to Know, and Stable Diffusion vs Commercial Tools.

The Major Players: Midjourney, DALL-E, and Stable Diffusion

Although a dozen or more platforms compete in this space, three dominate the conversation, each with distinct philosophies, strengths, and use cases. Understanding what makes each unique helps you choose the right tool for your specific needs, and often the answer is using multiple tools for different purposes.

Midjourney: The Artist’s Choice

Midjourney has become synonymous with “AI art” for good reason. If you’ve seen jaw-dropping AI-generated images shared on Twitter or Reddit, there’s a solid chance they came from Midjourney. The platform has carved out a unique position focused squarely on aesthetic quality and artistic expression.

What sets Midjourney apart is its consistent ability to produce images that feel intentional, composed, and often stunning. The platform seems to have an innate sense of what makes images visually appealing—composition, lighting, color harmony, artistic cohesion. Even relatively simple prompts tend to produce results that look like they came from a skilled digital artist rather than a computer algorithm.

Version 7, released on April 3, 2025 and made default on June 17, 2025, brought significant improvements to the platform. Midjourney V8 has been described as a likely January 2026 release, with planned changes including a new dataset, a move from TPUs to GPUs and PyTorch, a new UI, a reworked oref system, a style creator, a new Draft Mode, and more.

The platform’s recent innovations around style creation and character consistency are particularly impressive. The V7 model’s omni reference (oref) feature enables consistent characters and objects across generations, while Draft Mode allows 10x faster generation for rapid iteration. This addresses one of the historical pain points of AI image generation—being able to maintain consistency across multiple images for projects like comic books, storyboards, or branding materials.

Midjourney grew up on Discord, and working there is both a strength and a weakness. It creates a unique community atmosphere where you can see what others are creating, get inspired, and learn from shared prompts. But it’s also less polished than traditional software interfaces, and can feel chaotic for new users who aren’t familiar with Discord’s chat-based approach. Midjourney now also offers a web interface for people who would rather skip Discord.

The platform uses a subscription model with no free tier. Plans start at around $10 monthly for basic access, scaling up to $60+ for professional use with features like “Stealth Mode” that keeps your generations private. For serious users creating hundreds of images monthly, this pricing is reasonable. For casual experimentation, the lack of a free option is a barrier.

Midjourney excels at: artistic and illustrative styles, cinematic and atmospheric images, character designs and concept art, images where aesthetic quality matters more than photographic accuracy, and creative exploration where you want the AI to surprise you with beautiful interpretations.

Midjourney struggles with: precise control over exact details, generating images with specific text or logos, photorealistic accuracy (it often adds artistic flair even when you don’t want it), technical diagrams or infographics, and anything requiring extensive post-generation editing.

If you’re an artist, designer, or creative professional whose primary goal is creating visually striking images that capture mood and style, Midjourney is likely your best choice. Our Complete Midjourney Guide covers everything from prompt engineering to advanced features like style references and character consistency.

DALL-E 3: The Accessible Powerhouse

OpenAI’s DALL-E 3 takes a fundamentally different approach. Rather than requiring users to master prompt engineering or navigate specialized interfaces, DALL-E prioritizes accessibility and ease of use. Integration with ChatGPT Plus means you can literally have a conversation about the image you want, with the AI helping refine and improve your concept before generating anything.

This conversational approach is surprisingly powerful. Instead of staring at a blank prompt box trying to craft the perfect description, you can start with something vague like “I need an image for a blog post about productivity” and ChatGPT will ask clarifying questions, suggest directions, and eventually generate prompts that DALL-E uses to create images. For users intimidated by the blank-canvas problem, this guided experience is invaluable.

The technical capabilities are robust. DALL-E 3 introduced automatic prompt rewriting: GPT-4 expands every prompt into a much more detailed one before passing it to DALL-E, which gives significantly better results. This means even relatively casual descriptions produce well-composed, detailed images because the system intelligently expands your prompt behind the scenes.

One area where DALL-E particularly shines is text rendering. Early AI image generators couldn’t handle text at all—if you asked for an image with words, you’d get nonsensical letter-like shapes. DALL-E 3 reliably generates readable text in images, which is crucial for things like poster designs, social media graphics, or any visual that needs to include actual words.

The platform offers two style modes: natural and vivid. The natural style produces more subdued, realistic images similar to DALL-E 2’s aesthetic. The vivid style, which is the default in ChatGPT, creates more dramatic, cinematic, hyper-real results. The natural style is especially useful when DALL-E 3 exaggerates or confuses a subject that’s supposed to be simpler, more subdued, or more realistic.

DALL-E has some noteworthy limitations. It won’t generate images of public figures by name, has restrictions on generating images in the style of living artists, and includes various safety filters. These restrictions are more aggressive than those of competitors, which some users find frustrating but others appreciate as responsible AI deployment. OpenAI has also announced the deprecation of DALL-E 3 model snapshots in the API, effective May 12, 2026, though the functionality remains available through ChatGPT.

Pricing is straightforward: a ChatGPT Plus subscription ($20/month) includes DALL-E 3 along with GPT-4 and other features. If you only want image generation, this might feel expensive compared to alternatives. Developers can also access DALL-E through OpenAI’s API, paying per image generated.
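For developers, the natural and vivid styles described above map onto a `style` parameter in the image generation request. The sketch below only assembles and validates request arguments; it makes no network call. `build_dalle3_request` is a hypothetical helper name, and the allowed values reflect the API documentation as I understand it at the time of writing, so check the current reference before relying on them.

```python
ALLOWED_STYLES = {"vivid", "natural"}
ALLOWED_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
ALLOWED_QUALITIES = {"standard", "hd"}


def build_dalle3_request(prompt, style="vivid", size="1024x1024", quality="standard"):
    """Assemble keyword arguments for an image request (hypothetical helper, no I/O)."""
    if style not in ALLOWED_STYLES:
        raise ValueError(f"style must be one of {sorted(ALLOWED_STYLES)}")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}")
    if quality not in ALLOWED_QUALITIES:
        raise ValueError(f"quality must be one of {sorted(ALLOWED_QUALITIES)}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "style": style,      # "natural" for subdued results, "vivid" for dramatic ones
        "size": size,
        "quality": quality,
        "n": 1,              # DALL-E 3 generates one image per request
    }


request = build_dalle3_request(
    "a bowl of ramen on a wooden table, overcast natural light",
    style="natural",
)
print(request)
```

With the official `openai` Python package, a dictionary like this could be passed as `client.images.generate(**request)`. Each returned image carries a `revised_prompt` field showing the expanded prompt the system actually used, which is a handy way to see the automatic rewriting at work.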

DALL-E excels at: quick ideation and concept development, images that need readable text, working conversationally to refine ideas, photorealistic product photography or marketing imagery, and users who want good results without learning complex prompting techniques.

DALL-E struggles with: very specific artistic styles or aesthetics, the level of fine-grained control power users want, generating images of public figures or in styles of living artists, and use cases requiring hundreds of iterations (the conversational interface becomes less efficient at scale).

For most casual users, content creators, and businesses that need quality images without investing in learning specialized tools, DALL-E represents the easiest entry point. Our comprehensive DALL-E guide explores advanced features, prompt strategies, and integration options.

Stable Diffusion: The Open-Source Powerhouse

Stable Diffusion occupies a unique position in the AI image generation space. Unlike Midjourney and DALL-E, which are commercial services you access through their interfaces, Stable Diffusion is open-source software you can download and run yourself. This fundamental difference creates both enormous opportunities and significant complexity.

The open-source nature has spawned an incredible ecosystem. Custom-trained models exceeded 250,000 cumulative releases by 2024, representing fine-tuned versions optimized for specific styles, subjects, or use cases. Platforms like Civitai and Hugging Face host thousands of these custom models, each trained for specific purposes—anime-style generation, photorealistic portraits, architectural renderings, you name it.

This ecosystem is Stable Diffusion’s greatest strength. Want to generate images in a specific artist’s style? There’s probably a model for that. Need perfect anatomy for medical illustrations? There’s a specialized model. Want anime characters with a particular aesthetic? The community has created dozens of options. This level of specialization and customization simply doesn’t exist for closed platforms.

The latest official release, Stable Diffusion 3.5, launched in October 2024. It introduced an 8.1 billion-parameter Large model optimized for enterprise deployment and a 2.5 billion-parameter Medium variant. The Medium variant is particularly significant because it runs on consumer-grade hardware, making powerful image generation accessible without expensive GPUs.

Running Stable Diffusion yourself gives you complete control. No content restrictions beyond local laws. No monthly subscription fees. No rate limits on generation. You own your workflow entirely. For professionals generating thousands of images, this economic model becomes compelling quickly. For businesses with data privacy concerns, keeping image generation on-premises eliminates compliance worries about sending prompts and concepts to third-party services.

The tradeoff is complexity. Self-hosting requires technical comfort with things like Python environments, CUDA drivers, and GPU configurations. The most popular community interface, AUTOMATIC1111’s WebUI, is powerful but presents users with dozens of settings and options that can overwhelm beginners. There’s a real learning curve to understand sampling methods, CFG scales, model weights, and the other technical parameters that affect generation.
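Of those settings, the CFG scale is the one beginners meet first, and its effect is easy to show. “CFG” stands for classifier-free guidance: at each denoising step the model makes one noise prediction with your prompt and one without, then exaggerates the difference between them. The sketch below uses toy lists of numbers in place of real model outputs, and `apply_guidance` is an illustrative name rather than a function from any particular interface.

```python
def apply_guidance(noise_unconditional, noise_conditional, cfg_scale):
    """Classifier-free guidance: push the prediction toward the prompt."""
    return [
        u + cfg_scale * (c - u)
        for u, c in zip(noise_unconditional, noise_conditional)
    ]


unconditional = [0.0, 1.0, 0.5]   # toy prediction with an empty prompt
conditional = [1.0, 1.0, 0.25]    # toy prediction with the user's prompt

# A scale of 1.0 simply returns the prompt-conditioned prediction.
print(apply_guidance(unconditional, conditional, 1.0))

# Larger scales exaggerate whatever the prompt changed.
print(apply_guidance(unconditional, conditional, 7.5))
```

This is why low CFG values give looser, more varied images while very high values follow the prompt rigidly and can look harsh or oversaturated.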

For users who don’t want to self-host, several services provide Stable Diffusion access through web interfaces. The official DreamStudio by Stability AI offers a polished experience with the latest models. Other platforms like NightCafe, Leonardo.ai, and Playground AI provide varying takes on making Stable Diffusion accessible through browser interfaces.

The legal and licensing situation with Stable Diffusion is more complex than that of its competitors. Stable Diffusion 3.5 applies the permissive Stability AI Community License, while commercial enterprises with annual revenue exceeding one million dollars need the Stability AI Enterprise License. Earlier versions used the CreativeML OpenRAIL-M license, which allowed broad use with some content restrictions.

Stable Diffusion excels at: maximum control and customization, specialized use cases with custom-trained models, workflows requiring hundreds or thousands of images, data privacy and on-premises processing, and users willing to invest time learning for long-term benefits.

Stable Diffusion struggles with: ease of use for non-technical users, out-of-the-box results compared to commercial alternatives, consistent quality without understanding technical parameters, and the time investment required to become proficient.

The platform attracts a different user base than Midjourney or DALL-E—people who value control and customization over convenience, who have specific technical needs, or who generate images at a scale where ownership economics matter. Our detailed comparison of Stable Diffusion versus commercial tools explores these tradeoffs in depth.

Practical Applications: What You Can Actually Do With AI Image Generation

The technology is impressive, but what matters for most people is practical application. How are businesses, creators, and professionals actually using AI image generation in 2026? Let’s explore real-world use cases that have proven effective, along with specific strategies that work.

Content Creation and Marketing

This is probably the most widespread commercial application. Creating visual assets for blogs, social media, ads, and marketing materials used to require either design skills, expensive stock photo subscriptions, or hiring photographers and designers. AI image generation has dramatically lowered these barriers.

Blog and article imagery is the simplest use case. Every blog post needs a featured image. AI lets you create custom imagery that actually relates to your content rather than settling for generic stock photos. The images feel more intentional and can reinforce your article’s message visually. A post about productivity strategies can feature an AI-generated image specifically showing someone organizing their day, not just another random desk photo used by thousands of other sites.

Social media content creation has been transformed. Creating consistent, on-brand imagery for Instagram, Twitter, LinkedIn, or TikTok used to require serious design work. Now, marketers generate dozens of variations quickly, test what resonates, and iterate rapidly. The ability to experiment freely changes creative processes—you become willing to try bold, unusual approaches because the cost of failure is seconds, not hours.

Ad creative testing exemplifies the power of rapid iteration. Traditional ad production requires significant upfront investment—you create a few variations, run them, hope something works. AI generation flips this model. Create fifty different visual approaches, run small tests, identify winners, then invest in polishing the successful concepts. This test-and-learn approach dramatically improves campaign effectiveness.

Product visualization and e-commerce imagery represent a particularly compelling use case. You can generate lifestyle shots showing products in different settings, create multiple angles and lighting conditions, and produce seasonal or themed product photos, all without physical photography. This isn’t about replacing professional product photography for primary listings, but about generating supplementary imagery that would otherwise be cost-prohibitive.

One retail client I work with generates hundreds of product lifestyle images monthly using AI. Their main product photos are still professional studio shots, but they use AI to create contextual imagery showing products in use, seasonal promotional graphics, and social media content. This increased their visual content output by an order of magnitude without proportional budget increases.

Creative Work and Design

For artists, illustrators, and designers, AI image generation serves different roles depending on perspective and workflow. Some see it as a threat to their livelihoods. Others view it as a powerful new tool that enhances rather than replaces human creativity. The reality is nuanced and still evolving.

Concept development and ideation are where many professional creatives find genuine value. Generating dozens of quick concepts to explore different directions, visualizing ideas that are expensive or impossible to mock up traditionally, and using AI outputs as reference material or inspiration: these workflows integrate AI as a collaborator rather than a replacement.

One illustrator friend described her process: she uses Midjourney to generate 20-30 conceptual variations quickly, identifies the most promising directions, then creates the final illustration by hand using the AI outputs as reference. The AI handles the initial brainstorming and exploration phase that would previously involve hours of rough sketching. She still does all the final artistic work, but the process is more efficient and explores wider creative territory.

Storyboarding and pre-visualization for film, video, and animation have been revolutionized. Creating storyboards traditionally required hiring storyboard artists or spending hours creating rough visualizations. AI generation lets directors and producers visualize scenes quickly, experiment with framing and composition, and communicate vision to production teams before spending money on actual shooting or animation.

Character design for games, comics, and creative projects benefits enormously from AI’s ability to generate variations. Need to see what a character concept looks like with different costumes, expressions, or in various poses? Generate dozens of variations, identify what works, refine from there. The iteration speed transforms character development workflows.

Texture and asset generation for 3D work, game development, and VFX provides practical utility that’s less controversial. Generating seamless textures, creating reference images for 3D modeling, producing background assets—these use cases position AI as a productivity tool for technical creative work rather than replacing artistic expression.

Business and Professional Applications

Beyond creative and marketing work, AI image generation solves specific business problems across various industries.

Presentation design and slide decks become more visually engaging when you can generate custom imagery rather than relying on overused stock photos or clipart. Creating diagrams, illustrations of concepts, or thematic imagery that reinforces your message—all customizable to your specific needs and brand aesthetic.

Documentation and training materials benefit from custom imagery. Technical documentation showing specific equipment or processes, training materials with illustrations tailored to your exact procedures, educational content with visuals that match your curriculum—these applications value relevance and customization over artistic flair.

Mockups and prototypes for product development let businesses visualize concepts before investing in physical prototypes. What could packaging look like? How might different color schemes work? What if we changed this design element? Rapid visual iteration in early stages reduces costly mistakes later in development.

Real estate and architectural visualization is being transformed. Generating images showing how properties could look with different finishes, furniture, or landscaping. Creating visualizations of architectural concepts or renovation plans. Producing marketing materials showing properties in different seasons or lighting conditions. This doesn’t replace professional architectural renderings for serious projects, but provides accessible options for smaller-scale work.

Interior design consultation and virtual staging use AI to show clients possibilities. What would this room look like with different color schemes? How about various furniture arrangements? Generating these visualizations quickly and affordably makes design consultation more accessible.

Content and Media Industries

Publishing, journalism, and media companies face unique challenges around visual content that AI helps address.

Book cover design, particularly for self-published authors, has been democratized. Creating professional-looking covers without hiring designers makes self-publishing more viable. The results may not match the quality of professional design work (which involves typography, layout, and conceptual thinking beyond just imagery), but they’re dramatically better than amateur attempts.

Editorial illustrations for magazines, newspapers, and online publications provide quick, custom imagery for articles. The news cycle moves fast, and generating relevant imagery quickly has real value when time matters more than absolute artistic perfection.

Visual storytelling and journalism benefit from the ability to illustrate events, visualize data, or create explanatory graphics quickly. This doesn’t replace photojournalism or documentary photography, which capture reality in ways AI cannot and should not attempt. But for explainers, visualizations, and conceptual illustration, AI provides new options.

Crafting Effective Prompts: The Art and Science of Getting What You Want

Understanding how to communicate with AI image generators effectively makes the difference between frustration and consistent results. Prompt engineering isn’t about memorizing magic formulas—it’s about understanding what information the AI needs and how to provide it clearly.

The fundamental structure of effective prompts includes several key components. Start with the subject—what is the image primarily about? “A golden retriever,” “a futuristic cityscape,” “a bowl of ramen.” Be specific about important details while leaving room for interpretation where you want the AI to be creative.

Style and aesthetic guidance shapes how the subject appears. Are you going for photorealism, illustration, oil painting, watercolor, anime, cyberpunk, minimalist, or any of hundreds of other visual styles? Different platforms respond to style keywords differently, but broadly speaking, being explicit about style produces more consistent results than hoping the AI intuits your preferences.

Composition and framing details help control how the image is structured. “Close-up portrait,” “wide-angle landscape,” “bird’s eye view,” “symmetrical composition”—these terms guide spatial organization. Photography terms like “shallow depth of field” or “wide aperture” can produce specific visual effects even though the AI isn’t actually using a camera.

Lighting and atmosphere dramatically affect mood and visual impact. “Golden hour lighting,” “dramatic shadows,” “soft diffused light,” “neon lighting,” “candlelit,” “overcast natural light”—describing lighting conditions helps the AI capture the atmosphere you envision.

Technical parameters matter for certain use cases. Mentioning camera equipment, rendering engines, or quality indicators can push results in specific directions. “Shot on ARRI Alexa,” “Unreal Engine 5 render,” “8k resolution,” “highly detailed”—while the AI isn’t actually using these tools, it has learned associations between these terms and certain visual qualities.

The negative prompt technique, available in many tools, specifies what you don’t want. If faces keep appearing when you want landscapes, add “people, faces” to negative prompts. If colors are too saturated, add “oversaturated” to the negative list. This gives you finer control by eliminating unwanted elements.

Different platforms respond to prompts differently, which matters when you’re working across multiple tools. Midjourney tends to add artistic flair even to simple prompts, so sometimes you need to be more direct about wanting photorealism if that’s your goal. DALL-E takes prompts more literally and benefits from conversational refinement through ChatGPT. Stable Diffusion’s response depends heavily on which specific model you’re using, with different models trained on different data and responding differently to the same keywords.

The prompt building process often works best iteratively. Start simple, generate an image, identify what works and what doesn’t, then refine. Add more specific details about the elements that matter. Use negative prompts to eliminate recurring problems. Gradually zero in on the visual you want rather than trying to craft the perfect prompt from scratch.

A concrete example helps illustrate this process. Suppose you want an image for a blog post about coffee shops. Your first prompt might be: “a coffee shop.” This produces something generic. Next iteration: “a cozy independent coffee shop, warm lighting, customers working on laptops.” Better, but maybe it looks too modern when you want something more vintage. Third iteration: “a cozy vintage coffee shop, warm edison bulb lighting, wooden furniture, customers working on laptops, plants, books on shelves, photorealistic.” Now you’re getting closer to a specific vision rather than a generic coffee shop photo.
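The coffee shop iterations can also be written as structured data, which makes it easier to see which component changed between attempts. This is a small sketch rather than a feature of any tool: `PromptSpec` and its field names are hypothetical, chosen to mirror the components described earlier (subject, lighting, details, composition, style, and a negative list).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptSpec:
    """One prompt, split into the components discussed above (hypothetical names)."""
    subject: str
    lighting: str = ""
    details: tuple = ()
    composition: str = ""
    style: str = ""
    negative: tuple = ()

    def prompt(self):
        # Join only the components that were actually filled in.
        parts = [self.subject, self.lighting, *self.details, self.composition, self.style]
        return ", ".join(part for part in parts if part)

    def negative_prompt(self):
        return ", ".join(self.negative)


first = PromptSpec(subject="a coffee shop")

second = replace(
    first,
    subject="a cozy independent coffee shop",
    lighting="warm lighting",
    details=("customers working on laptops",),
)

third = replace(
    second,
    subject="a cozy vintage coffee shop",
    lighting="warm edison bulb lighting",
    details=("wooden furniture", "customers working on laptops", "plants", "books on shelves"),
    style="photorealistic",
    negative=("oversaturated",),   # only used by tools that accept negative prompts
)

for spec in (first, second, third):
    print(spec.prompt())
```

Keeping each iteration as its own object means you can always go back to an earlier version, and the positive and negative prompts stay separate for tools that take them as two inputs.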

Learning from others accelerates your prompt skills. Many creators share their prompts alongside generated images. Studying what prompts produce impressive results teaches you patterns, effective keywords, and structural approaches. Communities around Midjourney, Stable Diffusion, and other platforms are goldmines of shared knowledge.

Common mistakes to avoid include over-prompting (adding so many details that the AI gets confused and produces chaos), under-prompting (being too vague and getting generic results), conflicting instructions (asking for things that contradict each other, like “minimalist” and “highly detailed”), and prompt fatigue (reusing the exact same prompt structure for every image when a different approach might produce better results).
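Some of these mistakes can be caught with a quick sanity check before you generate anything. The sketch below is a hypothetical helper, not a feature of any platform. The term-count thresholds are arbitrary assumptions, and the only conflicting pair it knows about is the one mentioned above.

```python
# Pairs of terms that pull in opposite directions (example from the text).
CONFLICTING_PAIRS = [("minimalist", "highly detailed")]

# Arbitrary thresholds chosen for illustration; tune them for your tool.
MIN_TERMS = 2
MAX_TERMS = 12


def check_prompt(prompt):
    """Return a list of warnings for a comma-separated prompt."""
    terms = [t.strip().lower() for t in prompt.split(",") if t.strip()]
    warnings = []
    if len(terms) < MIN_TERMS:
        warnings.append("under-prompting: consider adding specific details")
    if len(terms) > MAX_TERMS:
        warnings.append("over-prompting: consider trimming less important details")
    text = prompt.lower()
    for first, second in CONFLICTING_PAIRS:
        if first in text and second in text:
            warnings.append(f"conflict: '{first}' vs '{second}'")
    return warnings


print(check_prompt("a coffee shop"))
print(check_prompt("minimalist poster, highly detailed, flat colors"))
```

A check like this cannot judge quality, but it can flag obvious contradictions before you spend generations on them.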

The meta-skill is learning to think like the AI does—in terms of visual concepts, styles, and compositional elements rather than high-level abstract ideas. When you want an image that evokes “freedom,” translate that abstract concept into concrete visual elements: open spaces, bright skies, birds in flight, expansive landscapes. The AI understands visual patterns, not abstract concepts, so your job is translation.
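One way to practice this translation is to keep your own lookup of abstract ideas and the concrete visuals you associate with them. The Python sketch below uses the "freedom" example from above. The `VISUAL_TRANSLATIONS` table and `translate_concept` function are hypothetical names for illustration, and the mapping is something you would build from your own experiments.

```python
# Personal lookup table: abstract concept -> concrete visual elements.
VISUAL_TRANSLATIONS = {
    "freedom": [
        "open spaces",
        "bright skies",
        "birds in flight",
        "expansive landscapes",
    ],
}


def translate_concept(concept, style=None):
    """Turn an abstract concept into a prompt made of concrete visuals."""
    elements = VISUAL_TRANSLATIONS.get(concept.lower())
    if elements is None:
        raise KeyError(f"no visual translation recorded for {concept!r}")
    parts = list(elements)
    if style:
        parts.append(style)
    return ", ".join(parts)


print(translate_concept("freedom", style="photorealistic"))
```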

Navigating the Legal and Ethical Minefield

We can’t discuss AI image generation honestly without addressing the uncomfortable questions about copyright, attribution, ethics, and the impact on creative professionals. This is messy territory where legal frameworks haven’t caught up with technology, ethical norms are contested, and strong feelings run in multiple directions.

The copyright question cuts in several directions. First, who owns AI-generated images? In the United States, current interpretation suggests that works created entirely by AI without human creative input aren’t copyrightable—they lack the human authorship required for copyright protection. However, images created through significant human creative direction using AI as a tool likely are copyrightable, though this remains legally untested. Different jurisdictions have different approaches, adding complexity for international use.

The more contentious issue is training data. Most AI image generators were trained on billions of images scraped from the internet—artwork, photographs, illustrations—without explicit permission from creators. Artists understandably object to their work being used to train systems that can then generate images “in the style of” their distinctive aesthetic. Several major lawsuits are working through courts as of 2026, and outcomes will significantly impact how these systems can operate legally.

Stability AI, the company behind Stable Diffusion, has faced multiple lawsuits, including a U.S. district court case in which Judge William Orrick III rejected some claims but allowed artist Sarah Andersen to pursue her main claim: that the use of her work to train Stable Diffusion infringes her copyright. These cases are ongoing and will likely set important precedents.

Different platforms have responded differently to these concerns. DALL-E won’t generate images “in the style of” living artists by name, and creators can opt their images out of training for future models. Midjourney faced criticism for its training approach but has worked to address concerns. Stable Diffusion’s open-source nature makes policing more difficult: even if official models remove certain training data, community versions might not.

The ethical considerations extend beyond legal questions. Is it ethical to generate images that mimic distinctive artistic styles without compensation to those artists? What about generating images for commercial use that compete with working photographers and illustrators? When does using AI as a tool become replacing human creative work?

These questions don’t have universal answers. A professional illustrator whose distinctive style gets copied by AI models understandably feels differently than a small business owner who can finally afford custom imagery for their website. The ethics depend on context, intent, and impact.

Some ethical guidelines seem reasonable regardless of perspective: being transparent about AI-generated content, not attempting to pass off AI generations as human-created work in contexts where that distinction matters, respecting platform terms of service around attribution and use restrictions, and acknowledging the creative debt owed to artists whose work trained these systems.

For professional use, the legal considerations become more serious. If you’re generating images for commercial purposes, understand the licensing terms of your chosen platform. Most grant commercial usage rights to paid subscribers, but restrictions vary. Using generated images in situations where provenance matters—expert testimony, journalism, legal documents—raises additional concerns about authenticity and reliability.

The impact on creative professionals is real and varied. Some creative jobs have undoubtedly been affected: stock photography, generic illustration work, and entry-level design positions face new competition from AI tools. At the same time, new opportunities have emerged for creators who adopt these tools, for art directors who can now iterate on concepts faster, and for businesses that can afford visual content creation for the first time.

The longer-term trajectory is uncertain. Will AI image generation replace significant portions of creative work, or will it become another tool that professionals integrate into their workflows? The answer is probably “both, depending on the specific type of creative work.” The impact looks different for fine artists than for commercial illustrators, and for photographers than for graphic designers.

What seems clear is that AI image generation isn’t disappearing. The technology works, it’s economically compelling for many use cases, and it’s becoming integrated into standard creative workflows. The relevant questions aren’t whether to use these tools but how to use them responsibly, how to evolve legal frameworks to balance innovation with creator rights, and how creative professionals adapt to this changed landscape.

For detailed discussion of these complex issues, including current legal status and best practices for ethical use, see our in-depth article on legal and copyright issues with AI-generated art.

Looking Ahead: What’s Next for AI Image Generation

Standing in 2026, it’s worth considering where this technology is headed. The rapid evolution over the past few years shows no signs of slowing, and several clear trends are emerging that will shape the next generation of tools.

Video generation is the obvious next frontier. Converting text to static images is impressive, but text-to-video adds a dimension of complexity that’s proved challenging.