Meta Description: Learn how regression and classification algorithms work and when to use them.

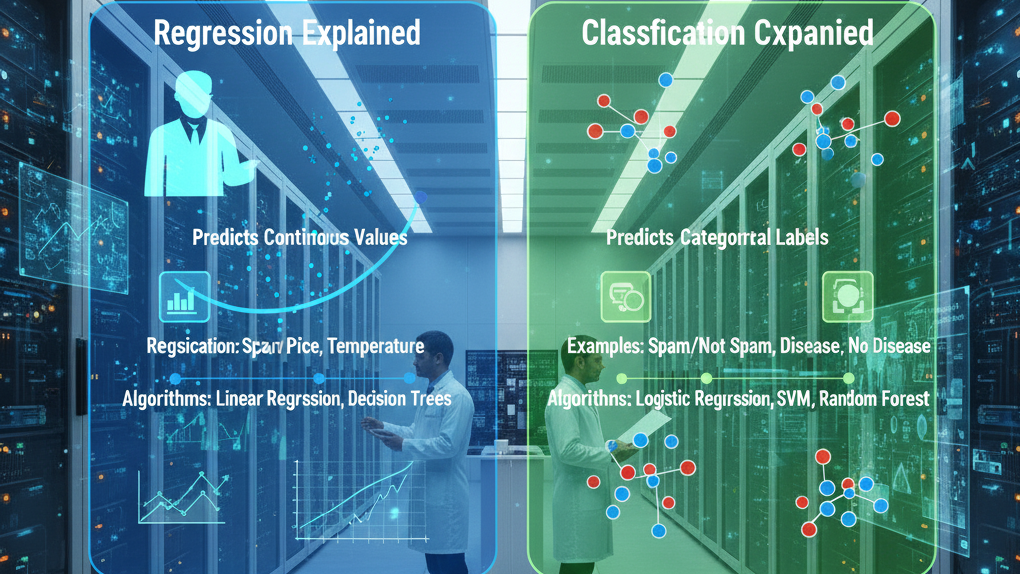

Regression and Classification are the two pillars of supervised learning. Both are predictive models that learn from labeled data, but they address different types of problems: regression predicts numeric quantities, while classification assigns inputs to categories. Mastering both is essential for any Machine Learning practitioner.

Understanding Regression

Regression is used when you want to predict a continuous numerical value. Continuous means the value can fall anywhere within a range rather than being limited to a fixed set of categories.

How Regression Works

Imagine predicting house prices. Given features like square footage, bedrooms, and location, you want to estimate a price. The price could be 250,000 or 350,500 or any value in between, not just “cheap” or “expensive.”

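Regression algorithms learn the underlying relationship between input features and a continuous output. The simplest form, Linear Regression, fits a straight line (or, with several features, a plane or hyperplane) through the data points, usually choosing the line that minimizes the sum of squared differences between predicted and actual values, and then uses that line to make predictions.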
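To make the least-squares idea concrete, here is a minimal pure-Python sketch of simple (one-feature) linear regression. It assumes the ordinary least-squares criterion; the function names and the square-footage/price numbers are hypothetical and made up for illustration. In practice you would normally use a library such as scikit-learn or NumPy instead.

```python
def fit_simple_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one feature)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Least-squares slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be identical")
    slope = cov_xy / var_x
    # The fitted line always passes through the point (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Predict a continuous value from the fitted line."""
    return slope * x + intercept


# Made-up toy data for illustration: square footage -> price
sizes = [1000, 1500, 2000, 2500]
prices = [200_000, 275_000, 350_000, 425_000]

slope, intercept = fit_simple_linear_regression(sizes, prices)
print(slope, intercept)                 # 150.0 50000.0
print(predict(slope, intercept, 1800))  # 320000.0
```

Because the toy prices lie exactly on a line, the fit is perfect here; with real data the line leaves residual errors, which is what regression evaluation metrics measure.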

Real-World Regression Applications

- Financial Forecasting: predicting stock prices or market trends.
- Real Estate: estimating property values.
- Healthcare: predicting patient recovery times.
- Energy Consumption: forecasting power usage.
- Salary Prediction: estimating wages based on experience and education.

Common Regression Algorithms

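- Linear Regression: assumes a linear relationship between inputs and output; simple and interpretable.
- Polynomial Regression: fits a higher-degree polynomial to capture non-linear relationships.
- Support Vector Regression: fits a function that keeps most training points within a tolerance margin and, with kernel functions, can model complex non-linear relationships.
- Decision Tree Regression: splits the data into regions based on feature thresholds and predicts the average target value of the training points in each region.
- Random Forest Regression: averages the predictions of many decision trees, which reduces overfitting compared with a single tree.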
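The "average value of each region" idea behind Decision Tree Regression can be seen in its smallest form: a depth-1 tree (a "stump") that makes a single split. The sketch below is a simplified illustration, not a full tree learner; it assumes one numeric feature and chooses the split that minimizes total squared error, and the function names and data are hypothetical. A real decision tree repeats this splitting recursively on each region.

```python
def fit_regression_stump(xs, ys):
    """Depth-1 regression tree: choose the threshold on x that minimizes the
    total squared error when each side predicts the mean of its targets."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        # Only split between two distinct x values
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        raise ValueError("need at least two distinct x values")
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def predict_stump(stump, x):
    """Return the average target value of the region x falls into."""
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


# Made-up toy data with two clearly separated groups
xs = [1, 2, 3, 10, 11, 12]
ys = [5, 6, 7, 20, 21, 22]

stump = fit_regression_stump(xs, ys)
print(stump)                      # (6.5, 6.0, 21.0)
print(predict_stump(stump, 2.5))  # 6.0
print(predict_stump(stump, 11))   # 21.0
```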

Evaluating Regression Models

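- Mean Absolute Error (MAE): the average absolute difference between predicted and actual values.
- Mean Squared Error (MSE): the average squared difference, which penalizes larger errors more heavily.
- Root Mean Squared Error (RMSE): the square root of MSE, expressed in the same units as the target variable.
- R² Score: the proportion of the variance in the target that the model explains (1.0 means a perfect fit).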
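The four regression metrics are short formulas, so a from-scratch sketch shows exactly what each one computes. This is a minimal pure-Python version written for illustration (the function name and sample values are hypothetical); libraries such as scikit-learn provide tested implementations of the same metrics.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MAE, MSE, RMSE and R² for paired actual/predicted values."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n  # squaring weights large errors more
    rmse = math.sqrt(mse)                 # back in the target's units
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # unexplained variation
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total variation
    if ss_tot == 0:
        raise ValueError("R² is undefined when all actual values are equal")
    r2 = 1 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


# Made-up actual and predicted values
y_true = [3, 5, 7, 9]
y_pred = [2, 5, 8, 11]

metrics = regression_metrics(y_true, y_pred)
print({name: round(value, 4) for name, value in metrics.items()})
# {'MAE': 1.0, 'MSE': 1.5, 'RMSE': 1.2247, 'R2': 0.7}
```

Note how the single error of 2 contributes 4 to the squared-error sum but only 2 to the absolute-error sum, which is why MSE and RMSE react more strongly to large misses than MAE does.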

Understanding Classification

Classification predicts which category an item belongs to. Unlike regression, which outputs continuous values, classification outputs discrete categories, also called classes.

How Classification Works

Imagine building an email filter. An email must be classified as either “spam” or “legitimate,” with nothing in between. Classification algorithms learn to distinguish between such predefined categories based on the input features.
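One of the simplest ways to learn categories from labeled examples is k-nearest neighbors: a new item gets the most common label among the k most similar training examples. The sketch below applies it to the email-filter example above; the two features (number of links, number of "!" characters), the data, and the function name are hypothetical, chosen only for illustration. It uses `math.dist`, which requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_classify(train_X, train_y, query, k=3):
    """Predict the majority label among the k training points nearest to query."""
    # Euclidean distance from the query to every labeled training example
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # The most frequent label among the neighbors becomes the prediction
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical features per email: (number of links, number of "!" characters)
train_X = [(0, 0), (1, 0), (0, 1), (8, 9), (9, 7), (10, 10)]
train_y = ["legitimate", "legitimate", "legitimate", "spam", "spam", "spam"]

print(knn_classify(train_X, train_y, (1, 1)))  # legitimate
print(knn_classify(train_X, train_y, (9, 9)))  # spam
```

Whatever the input, the output is always one of the predefined labels, never a value "in between."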

Real-World Classification Applications

- Medical Diagnosis: classifying tumors as benign or malignant.
- Fraud Detection: identifying fraudulent vs. legitimate transactions.
- Sentiment Analysis: classifying reviews as positive, negative, or neutral.
- Image Recognition: identifying objects, faces, or animals.
- Spam Detection: filtering spam from legitimate emails.
- Customer Categorization: segmenting customers as high-value, medium-value, or low-value.

Types of Classification

- Binary Classification: two classes, such as spam or not spam.
- Multi-class Classification: more than two mutually exclusive classes, such as cat, dog, or bird.
- Multi-label Classification: each instance can belong to several classes at once, for example a photo tagged both “beach” and “sunset.”

Common Classification Algorithms

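- Logistic Regression: despite its name, a classification algorithm; it estimates the probability that an input belongs to a class and separates classes with a decision boundary. It is interpretable and well suited to binary classification.
- Decision Trees: recursively split the data on feature thresholds; intuitive to read and explain.
- Random Forests: combine many decision trees to reduce overfitting.
- Support Vector Machines: find the hyperplane that separates classes with the widest possible margin.
- Naive Bayes: applies Bayes’ theorem with the simplifying assumption that features are independent given the class; fast to train.
- K-Nearest Neighbors: assigns the most common class among the k closest training examples.
- Neural Networks: learn complex, non-linear patterns.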
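To show how Logistic Regression turns a probability into a class, here is a minimal one-feature sketch trained with plain batch gradient descent on the log-loss. The learning rate, epoch count, toy data, and function names are illustrative assumptions; practical libraries usually use more sophisticated solvers and add regularization.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function mapping any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(xs, ys, lr=0.1, epochs=10_000):
    """Fit P(y=1 | x) = sigmoid(w*x + b) with batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Estimated probability that x belongs to class 1."""
    return sigmoid(w * x + b)


def predict_class(w, b, x, threshold=0.5):
    """Turn the probability into a discrete class label (0 or 1)."""
    return 1 if predict_proba(w, b, x) >= threshold else 0


# Made-up 1-D data: class 1 becomes more likely as x grows (with some overlap)
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = train_logistic_regression(xs, ys)
print(predict_class(w, b, 1), predict_class(w, b, 8))  # 0 1
```

The decision boundary is where the probability crosses 0.5, i.e. x = -b / w; for this symmetric toy data it should land near x = 4.5.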

Evaluating Classification Models

- Accuracy: the percentage of all predictions that are correct.
- Precision: of the instances predicted positive, the fraction that are actually positive.
- Recall: of the actual positives, the fraction the model caught.
- F1-Score: the harmonic mean of precision and recall, balancing the two.
- Confusion Matrix: a table of true positives, true negatives, false positives, and false negatives.
- ROC Curve: plots the true positive rate against the false positive rate as the classification threshold varies, showing the trade-off between them.
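All of the count-based metrics derive from the four confusion-matrix cells. The pure-Python sketch below computes them for a binary problem; the labels are made up, the function name is hypothetical, and reporting 0.0 when precision or recall is undefined (no predicted or actual positives) is one common convention, assumed here. The ROC curve is not covered, since it needs predicted scores rather than hard labels.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix counts plus accuracy, precision, recall and F1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when no positives are predicted or present
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn,
            "accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up labels: 1 = spam, 0 = legitimate
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

print(classification_metrics(y_true, y_pred))
# {'TP': 3, 'TN': 5, 'FP': 1, 'FN': 1, 'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```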

Regression vs. Classification: Key Differences

Regression outputs continuous values; Classification outputs discrete categories. Regression predicts how much, while Classification predicts which category.

When to Use Each

Use Regression when your target is continuous, you need a numeric estimate rather than a category, or the problem involves forecasting or estimation. Use Classification when your target is categorical, you want to assign items to categories, or the problem involves categorization or detection.

Hybrid Scenarios

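The two approaches can also be combined. You can convert regression output into classes, for example by thresholding a predicted value or binning it into ranges, or use classification as an intermediate step in a regression pipeline, for example by first predicting which category an item falls into and then applying a regression model suited to that category.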
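Converting regression output into classes can be as simple as binning the predicted number. In this sketch the band names and cutoff values are hypothetical, and the "predictions" stand in for the output of some price-regression model; the standard-library `bisect_right` finds which band each value falls into.

```python
from bisect import bisect_right


def to_price_band(predicted_price, cutoffs=(250_000, 400_000),
                  labels=("low", "medium", "high")):
    """Map a continuous regression prediction onto a discrete class label.

    cutoffs must be sorted; a value equal to a cutoff falls into the higher band.
    """
    return labels[bisect_right(cutoffs, predicted_price)]


# Hypothetical outputs from a price-regression model
predictions = [180_000.0, 320_000.0, 400_000.0, 515_000.0]
print([to_price_band(p) for p in predictions])
# ['low', 'medium', 'high', 'high']
```

Keeping the cutoffs as a parameter makes it easy to change the bands without retraining the underlying regression model.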

Conclusion

Regression and classification are fundamental tools in your Machine Learning toolkit. Regression answers how much; Classification answers which category. Ready to explore more algorithms? Check out our guide on Top Machine Learning Algorithms.