Generative AI today is no longer “just ChatGPT”. It is a whole family of powerful models, with Claude and Google Gemini alongside ChatGPT, each with different strengths, weaknesses and ideal use cases. Choosing the wrong model can waste time and budget, while choosing the right one for each task gives you better quality at lower cost. Recent head‑to‑head comparisons between ChatGPT, Claude and Gemini show that the real differences appear when you look at coding, writing, reasoning, pricing and speed in practical scenarios, not just benchmark numbers.

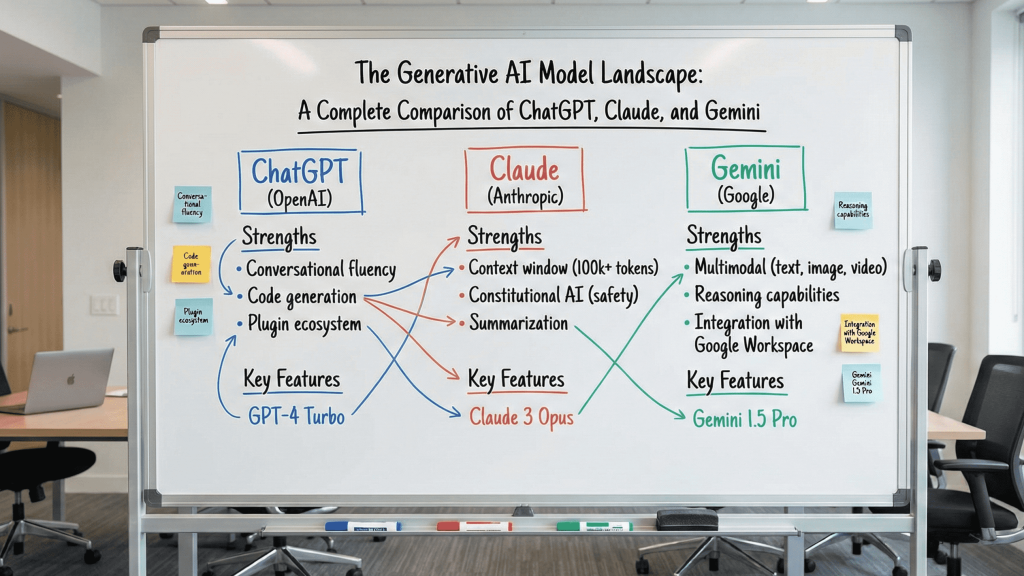

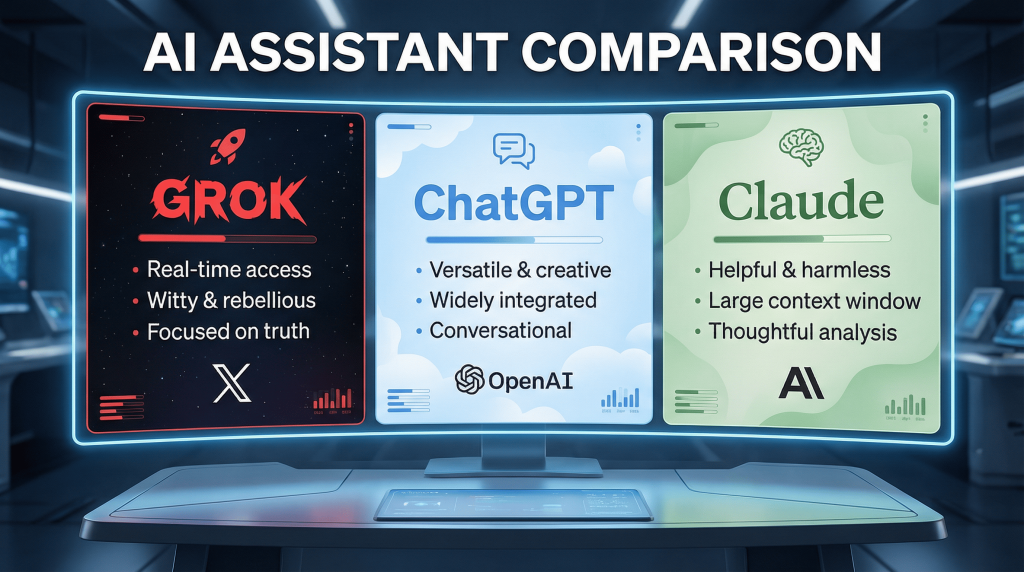

1. Overview of the Main Commercial Models

In 2025–2026, the most important generative models can be grouped into three main families: OpenAI models (such as GPT‑4o), Anthropic models (Claude Sonnet and Opus), and Google models (Gemini 2.x Pro and Flash). These three families dominate most evaluation leaderboards and are the default options in many professional AI tools. Each company focuses on something slightly different: OpenAI on flexibility and tool integrations, Anthropic on safety and careful reasoning, and Google on speed, long context and deep integration with its cloud ecosystem.

2. Coding and Software Development

Standard coding benchmarks show that Claude often leads on difficult programming tasks and automated bug‑fixing. ChatGPT remains a very strong all‑round coding assistant with the richest third‑party integrations, and Gemini shines when you need to load very large codebases, thanks to its massive context window. In practice, an individual developer working on a typical project can safely default to ChatGPT, while teams dealing with complex systems or huge repositories may prefer Claude for code quality or Gemini for long‑context analysis.

3. Writing, Summarization and Reasoning

For creative and marketing‑style writing, many reviewers find ChatGPT the most “human‑sounding” and the easiest to steer in tone of voice. Claude tends to produce more cautious, structured explanations, which makes it well suited to sensitive documents and educational material. Gemini performs strongly at summarizing and analyzing very large documents, where you can paste dozens or hundreds of pages at once. For deep logical reasoning and complex STEM problems, specialized reasoning models exist, but they are usually slower and more expensive than general models.

4. Long Context and Multimodality

One of the main differentiators today is context window size. Long‑context Gemini models can accept hundreds of thousands or even a million tokens in a single request, making them ideal for analyzing corporate reports, research collections or huge codebases without splitting them into many chunks. ChatGPT and Claude also support large contexts, though generally smaller than Gemini’s; they compensate with multi‑turn dialogue that builds up understanding of the material gradually. On the multimodal side, all three support text and images to different degrees, while Gemini and ChatGPT currently push ahead more aggressively on video and audio features in certain plans.
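To make the chunking trade‑off concrete, here is a minimal sketch that estimates whether a document fits in a given context window and, if not, how many pieces it has to be split into. It assumes a rough rule of thumb of about four characters per token for English text, which is not a real tokenizer, and the function names and window sizes are hypothetical placeholders rather than any provider's actual limits.

```python
import math

# Assumption: ~4 characters per token, a rough heuristic for English prose.
# Real tokenizers differ by model and language, so treat results as estimates.
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def split_for_context(text, context_tokens, reserved_tokens=2000):
    """Split text into chunks that each fit the window.

    reserved_tokens is room kept free for the prompt and the model's answer.
    Chunks are cut at fixed character offsets, so a cut can fall mid-word;
    a production splitter would cut at paragraph or section boundaries.
    """
    budget = context_tokens - reserved_tokens
    if budget <= 0:
        raise ValueError("reserved_tokens must be smaller than context_tokens")
    max_chars = budget * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


# Hypothetical example: a 1,000,000-character report (~250,000 tokens).
report = "x" * 1_000_000
print(estimate_tokens(report))                    # 250000
print(len(split_for_context(report, 1_000_000)))  # 1 chunk: fits in one request
print(len(split_for_context(report, 128_000)))    # 2 chunks: must be split
```

With a large enough window the whole report goes into a single request, while a smaller window forces a split and the extra work of combining the partial answers afterwards.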

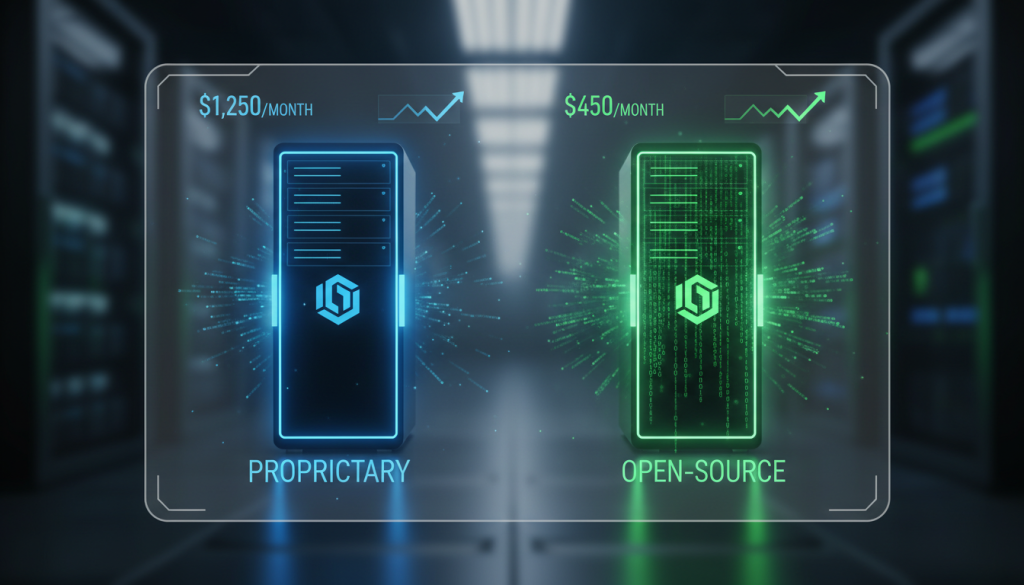

5. Pricing and Real Cost of Use

If you compare raw dollar prices per million tokens, you will see clear differences between providers. The true picture emerges only when you calculate the cost of an entire project, including how many retries you need and how much human time each model saves. Pricing comparisons show that newer OpenAI models are significantly cheaper than older generations, Anthropic cut Claude prices sharply in late 2025, and Google keeps Gemini very competitive, especially for long‑context workloads. The best choice balances nominal price, the number of calls needed and the value of the time it saves your team.
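The project‑level calculation described above can be sketched in a few lines. This is one possible way to model it, not a standard formula: it treats retries as an average number of attempts per task and human time as review minutes per task. All names, prices and figures below are made‑up placeholders, not real provider pricing; substitute your own measurements.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    """Hypothetical inputs for one model; every value is your own estimate."""
    name: str
    input_price_per_mtok: float     # USD per million input tokens
    output_price_per_mtok: float    # USD per million output tokens
    avg_attempts_per_task: float    # 1.0 means no retries are ever needed
    review_minutes_per_task: float  # human time spent checking or fixing output


def project_cost(model, tasks, input_tokens, output_tokens, hourly_rate):
    """Total cost in USD: API spend over all attempts plus human review time."""
    calls = tasks * model.avg_attempts_per_task
    api_cost = calls * (
        input_tokens * model.input_price_per_mtok
        + output_tokens * model.output_price_per_mtok
    ) / 1_000_000
    human_cost = tasks * model.review_minutes_per_task / 60 * hourly_rate
    return api_cost + human_cost


# Placeholder profiles: a cheaper model that needs more retries and review,
# and a pricier one that needs fewer. These are illustrative numbers only.
cheap = ModelProfile("model_a", 1.0, 4.0, 1.6, 6.0)
pricey = ModelProfile("model_b", 3.0, 15.0, 1.1, 3.0)

for m in (cheap, pricey):
    total = project_cost(m, tasks=1000, input_tokens=2000,
                         output_tokens=500, hourly_rate=60.0)
    print(f"{m.name}: ${total:.2f}")
# model_a: $6006.40
# model_b: $3014.85
```

In this invented scenario the model with the higher token price ends up roughly half as expensive overall, because the human review time dominates the API bill.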