Neural Networks are at the heart of modern Artificial Intelligence. Yet their workings often seem mysterious and intimidating. This guide demystifies neural networks, explaining how they work, why they’re effective, and how you can start building them.

The Biological Inspiration

Neural networks are inspired by biological brains. Our brains contain roughly 86 billion neurons, each connected to thousands of others, forming a complex network that enables thought, learning, and action.

Artificial Neural Networks borrow this structure in mathematical form: many simple units joined by adjustable connections. They are far simpler than biological brains, but they keep the essential principle that learning happens by adjusting the strength of those connections.

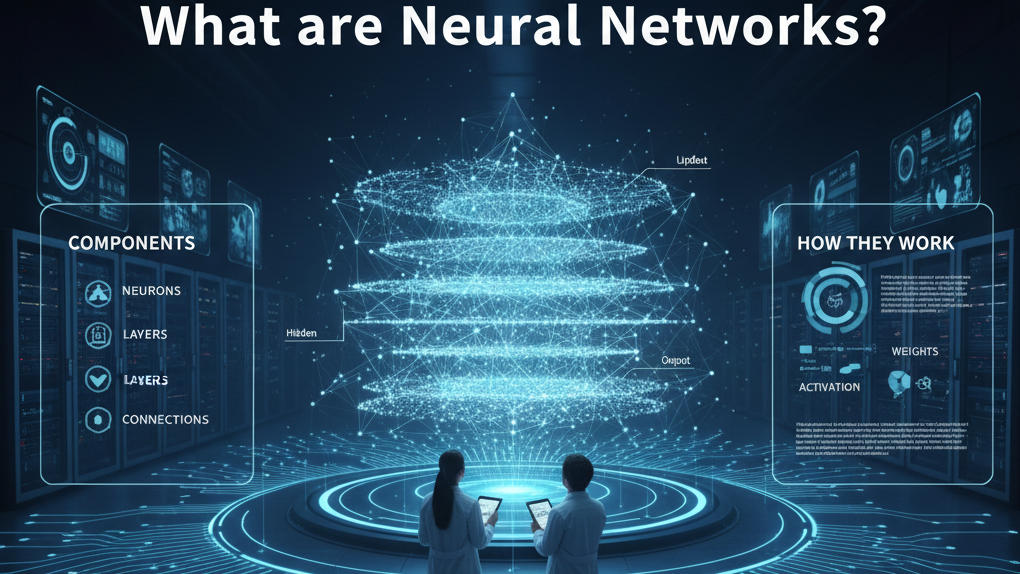

Anatomy of a Neural Network

Neurons

Artificial neurons are mathematical functions. Each receives inputs, combines them using weights, adds a bias, and passes the result through an activation function.

Mathematically: output = activation(sum(inputs × weights) + bias)
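
The formula above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the sigmoid activation, the function names, and the sample numbers are all assumptions chosen for the example.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """output = activation(sum(inputs x weights) + bias)."""
    # Weighted sum: multiply each input by its weight and add the results.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # Add the bias, then pass the result through the activation function.
    return sigmoid(weighted_sum + bias)


# Illustrative values only: three inputs, three weights, one bias.
output = neuron(inputs=[0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.2], bias=0.1)
print(round(output, 4))
```

Here the weighted sum is 0.2 - 0.3 - 0.4 = -0.5, the bias raises it to -0.4, and the sigmoid maps that to roughly 0.40.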

Weights and Biases

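Weights control how much each input contributes: a larger magnitude means more influence, and a negative weight makes the input push the output down. The bias is added to the weighted sum, which shifts the point at which the activation function responds. Think of them as knobs the network tunes to solve problems.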
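
To see what turning these knobs does, here is a small sketch using a one-input neuron. The sigmoid activation and the specific numbers are assumptions picked for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_1d(x: float, weight: float, bias: float) -> float:
    """A neuron with a single input, so each knob's effect is easy to see."""
    return sigmoid(weight * x + bias)


# Same input, same bias: a larger weight makes the input count for more.
small_weight = neuron_1d(1.0, weight=0.5, bias=0.0)
large_weight = neuron_1d(1.0, weight=5.0, bias=0.0)

# The sigmoid outputs exactly 0.5 where weight * x + bias == 0.
# With weight=1 and bias=-2, that crossing point moves from x=0 to x=2.
at_crossing = neuron_1d(2.0, weight=1.0, bias=-2.0)
without_bias = neuron_1d(2.0, weight=1.0, bias=0.0)

print(small_weight < large_weight)  # True
print(at_crossing)                  # 0.5
```

With a sigmoid, the weight sets how sharply the output reacts to the input, while the bias moves the input value at which the neuron switches from mostly "off" to mostly "on".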

How Neural Networks Learn: Backpropagation

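Training repeats three steps many times. Forward Propagation sends data through the layers to produce predictions. Loss Calculation compares the predictions with the actual values to measure the error. Backpropagation passes that error backward through the layers to compute gradients, which say how much each weight and bias contributed to the error; an optimizer such as gradient descent then uses those gradients to update the weights and biases and reduce the error.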
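
The three steps can be sketched as a small training loop. To keep it short, this example trains a single sigmoid neuron rather than a multi-layer network, so the backward pass is one application of the chain rule; in a deeper network the same rule is applied layer by layer. The OR dataset, the squared-error loss, the learning rate, and all names are assumptions made for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset (illustrative): the logical OR of two inputs.
inputs = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
targets = [0.0, 1.0, 1.0, 1.0]


def train(epochs: int = 5000, learning_rate: float = 1.0):
    weights = [0.0, 0.0]
    bias = 0.0
    losses = []
    n = len(inputs)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        total_loss = 0.0
        for x, y in zip(inputs, targets):
            # 1. Forward propagation: compute the prediction.
            z = weights[0] * x[0] + weights[1] * x[1] + bias
            p = sigmoid(z)
            # 2. Loss calculation: squared error for this example.
            total_loss += (p - y) ** 2
            # 3. Backward pass: chain rule from the loss back to each parameter.
            dloss_dp = 2.0 * (p - y)       # d(loss)/d(prediction)
            dp_dz = p * (1.0 - p)          # derivative of the sigmoid
            dloss_dz = dloss_dp * dp_dz
            grad_w[0] += dloss_dz * x[0]   # dz/dw_i is simply x_i
            grad_w[1] += dloss_dz * x[1]
            grad_b += dloss_dz             # dz/dbias is 1
        # Gradient descent update: step against the average gradient.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
        losses.append(total_loss / n)
    return weights, bias, losses


weights, bias, losses = train()
predictions = [
    round(sigmoid(weights[0] * x[0] + weights[1] * x[1] + bias)) for x in inputs
]
print(predictions)
print(losses[0], losses[-1])
```

The recorded loss starts at 0.25, because every prediction is 0.5 before any update, and falls as the weights and bias are tuned. In practice, frameworks compute the backward pass automatically, but the loop has the same shape.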

Conclusion

Neural networks are powerful function approximators capable of learning complex patterns. Understanding their structure and training process is essential for working with Deep Learning. Explore Convolutional Neural Networks or return to the Deep Learning Pillar for more context.
