
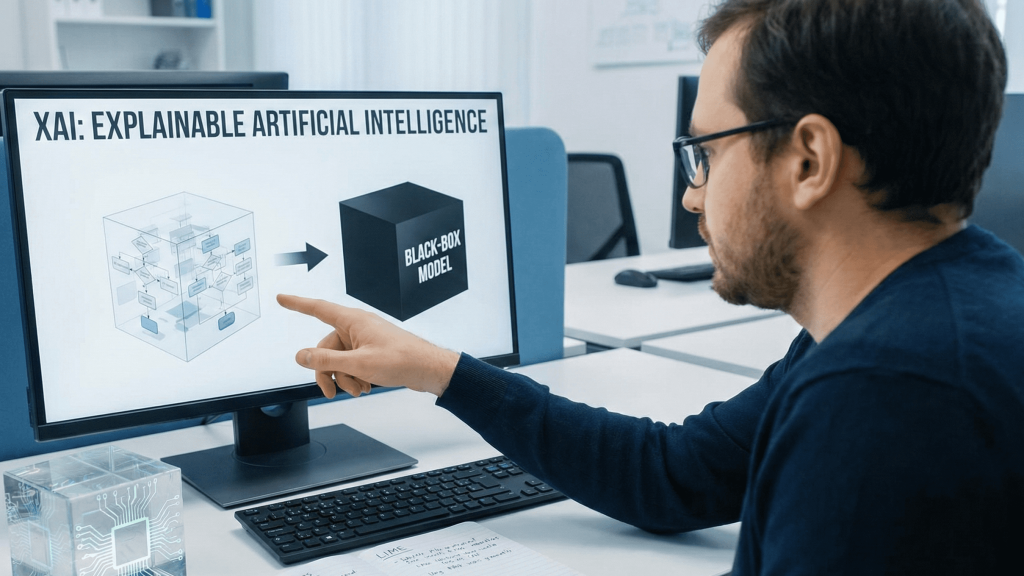

Imagine you’re denied a loan by an AI system. When you ask why, no one can explain the decision. That’s the problem with black-box AI. Explainable AI aims to solve this by making AI decisions transparent, understandable, and defensible.

The Challenge: Black-Box Models

Many powerful AI models, particularly deep neural networks, are extremely complex. They can have millions of parameters and layers that interact in non-obvious ways. The relationship between inputs and outputs becomes difficult or impossible to trace.

This creates problems. People deserve to understand decisions that affect them. Regulators increasingly require explainability. Biased decisions are easier to hide in black boxes. Without understanding how models work, it’s hard to improve them or catch errors.

What Is Explainable AI?

Explainable AI refers to methods and techniques that make the decisions, predictions, and actions of AI systems understandable to human experts and non-experts alike.

This isn’t just about transparency. It’s about making AI decisions trustworthy, debuggable, and defensible. It’s about maintaining human agency and accountability as AI plays an increasingly important role in society.

XAI Techniques

Feature Importance

Feature importance analysis identifies which input variables matter most for the model’s predictions across the whole dataset. A loan-approval model might show that income and credit score are the most important factors, while age is relatively unimportant.

This helps you understand what the model is using to make decisions and whether those factors are appropriate.
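One common way to measure this is permutation importance: shuffle one feature's values and see how much the model's accuracy drops. The sketch below is a minimal illustration using only the standard library. The `toy_model` function and the synthetic data are hypothetical stand-ins for a real trained loan model, and the model is deliberately written to ignore age.

```python
import random

def toy_model(row):
    # Hypothetical stand-in for a trained loan model: approves (1) when an
    # equal-weight blend of income and credit score exceeds 0.5. Age is ignored.
    income, credit_score, age = row
    return 1 if 0.5 * income + 0.5 * credit_score > 0.5 else 0

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, rng, repeats=10):
    """Average accuracy drop when each feature column is shuffled."""
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

rng = random.Random(0)
# Synthetic applicants with features scaled to [0, 1]: (income, credit_score, age).
rows = [(rng.random(), rng.random(), rng.random()) for _ in range(500)]
labels = [toy_model(r) for r in rows]  # labels come from the model, so baseline accuracy is 1.0

importances = permutation_importance(toy_model, rows, labels, rng)
for name, value in zip(("income", "credit_score", "age"), importances):
    print(f"{name}: {value:.3f}")
```

Because the toy model never reads age, shuffling that column changes nothing and its importance comes out as zero, while income and credit score both show a clear drop. On a real model you would run the same procedure on held-out data rather than on labels generated by the model itself.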

LIME

LIME (Local Interpretable Model-agnostic Explanations) explains individual predictions by approximating the model locally with an interpretable model, typically a simple linear one.

LIME works by taking a specific prediction, perturbing the input slightly, seeing how the output changes, and fitting a simple model to explain these changes. It’s “local” because it explains one prediction at a time, not the entire model.
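To make those steps concrete, here is a simplified sketch of the LIME idea using only the standard library. It is not the `lime` package's implementation: the real library also maps inputs to an interpretable representation and can select a subset of features. The `black_box` function, the noise scale, and the kernel width are all assumptions chosen for illustration.

```python
import math
import random

def black_box(x):
    # Hypothetical nonlinear model returning an approval probability.
    income, credit = x
    return 1 / (1 + math.exp(-(3 * income * credit + 2 * credit - 2)))

def solve(a, b):
    # Gaussian elimination with partial pivoting for a small dense system.
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def lime_explain(model, instance, rng, n_samples=2000, scale=0.1, kernel_width=0.25):
    """Fit a proximity-weighted linear model around one instance."""
    d = len(instance)
    # Accumulate the weighted least-squares normal equations:
    # (X^T W X) beta = X^T W y
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [v + rng.gauss(0, scale) for v in instance]     # perturb the input
        dist2 = sum((a - b) ** 2 for a, b in zip(z, instance))
        w = math.exp(-dist2 / kernel_width ** 2)            # closer samples count more
        feats = [1.0] + [a - b for a, b in zip(z, instance)]  # centered on the instance
        y = model(z)                                         # see how the output changes
        for i in range(d + 1):
            xtwy[i] += w * feats[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * feats[i] * feats[j]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]  # intercept, per-feature local slopes

intercept, coefs = lime_explain(black_box, [0.5, 0.5], random.Random(0))
for name, c in zip(("income", "credit"), coefs):
    print(f"{name}: local effect {c:+.3f}")
```

The coefficients describe how the prediction responds to small changes around this one applicant. For a different applicant, the same procedure can produce quite different coefficients, which is exactly what "local" means here.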

SHAP Values

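SHAP (SHapley Additive exPlanations) assigns each feature a value representing its contribution to pushing the prediction away from the base value, which is typically the model’s average prediction. It’s based on Shapley values from cooperative game theory, which guarantee that the feature contributions add up to the difference between the prediction and the base value.

SHAP values show how much each feature contributed to a specific prediction and whether it pushed the prediction up or down. For models with many features, these values are usually approximated rather than computed exactly.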
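For a model with only a few features, Shapley values can be computed exactly by averaging each feature's marginal contribution over every possible coalition of the other features. The sketch below does this with the standard library. It is an illustration of the underlying math, not the `shap` package's API. The `model` function is hypothetical, and "removing" a feature is simulated by substituting a single baseline value, which is a simplifying assumption (SHAP implementations typically average over a background dataset instead).

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical scoring model; note that it never reads age.
    income, credit, age = x
    return 0.4 * income + 0.5 * credit + 0.2 * income * credit

def shapley_values(model, instance, baseline):
    """Exact Shapley values, using baseline values for absent features."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value; the rest
        # fall back to the baseline.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(x)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

instance = [0.8, 0.9, 0.3]   # (income, credit, age) for one applicant
baseline = [0.5, 0.5, 0.5]   # assumed reference applicant
phis = shapley_values(model, instance, baseline)

for name, phi in zip(("income", "credit", "age"), phis):
    print(f"{name}: {phi:+.4f}")
print("sum of contributions:", round(sum(phis), 4))
print("prediction - base value:", round(model(instance) - model(baseline), 4))
```

The last two printed numbers match, which is the additivity property in action. The cost of this exact approach doubles with every added feature, which is why practical tools rely on sampling or model-specific shortcuts.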

Attention Mechanisms

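In neural networks, attention mechanisms assign weights that indicate which parts of the input the model focused on when producing an output. In image models, these weights can be visualized to show which regions of the image received the most attention. In text analysis, they show which words were weighted most heavily. Treat attention weights as a useful hint rather than a complete explanation, since a high weight does not always mean that input drove the final decision.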
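To show where these weights come from, here is a minimal sketch of scaled dot-product attention for a single query, using only the standard library. The tokens and the two-dimensional query and key vectors are made up for illustration. In a real network they would be learned, much higher-dimensional, and spread across many attention heads.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights for one query vector."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    return softmax(scores)

# Hypothetical tokens and key vectors (illustrative values, not learned).
tokens = ["loan", "denied", "due", "to", "debt"]
keys = [
    [0.2, 0.1],
    [0.9, 0.3],
    [0.0, 0.1],
    [0.1, 0.0],
    [1.0, 0.8],
]
query = [1.0, 0.5]

weights = attention_weights(query, keys)
for token, w in zip(tokens, weights):
    print(f"{token:>7}: {w:.3f}")

top_token = tokens[weights.index(max(weights))]
print("most attended token:", top_token)
```

The weights always sum to 1, so they can be read as how the model distributes its focus across the input tokens for this particular query.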

Model Distillation

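Model distillation trains a simpler, interpretable model (such as a shallow decision tree) to approximate a complex black-box model’s behavior. You can then analyze the simpler model to understand how the complex model works, keeping in mind that the explanation is only as reliable as the match between the two models’ predictions.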
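As a rough sketch of this idea, the code below fits a small decision tree to the outputs of a black-box function and then measures fidelity, meaning how often the tree agrees with the black box on fresh inputs. Everything here is an assumption for illustration: the `black_box` function stands in for a complex model, the data is synthetic, and the greedy tree learner is a bare-bones version written with the standard library rather than a production implementation.

```python
import random

def black_box(x):
    # Hypothetical complex model with a curved decision boundary.
    income, credit = x
    return 1 if income * credit + 0.3 * credit > 0.45 else 0

def majority(labels):
    return 1 if 2 * sum(labels) >= len(labels) else 0

def best_split(rows, labels):
    """Find the (feature, threshold) split with the fewest misclassifications."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            errors = (min(sum(left), len(left) - sum(left))
                      + min(sum(right), len(right) - sum(right)))
            if best is None or errors < best[0]:
                best = (errors, f, t)
    return best

def fit_tree(rows, labels, depth):
    # A leaf is a plain label; an internal node is (feature, threshold, left, right).
    split = best_split(rows, labels) if depth > 0 and len(set(labels)) > 1 else None
    if split is None:
        return majority(labels)
    _, f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return (f, t,
            fit_tree([r for r, _ in left], [y for _, y in left], depth - 1),
            fit_tree([r for r, _ in right], [y for _, y in right], depth - 1))

def predict(tree, x):
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if x[f] <= t else right
    return tree

rng = random.Random(0)
train = [(rng.random(), rng.random()) for _ in range(400)]
test = [(rng.random(), rng.random()) for _ in range(400)]

# The surrogate learns from the black box's outputs, not from true labels.
surrogate = fit_tree(train, [black_box(x) for x in train], depth=3)

fidelity = sum(predict(surrogate, x) == black_box(x) for x in test) / len(test)
print(f"fidelity on held-out inputs: {fidelity:.2%}")
```

The resulting tree is a handful of threshold rules on income and credit that a person can read directly. If fidelity is low, the tree's rules describe the tree, not the black box, so check that number before drawing conclusions.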

Why Explainability Matters

Trust

People are more likely to trust AI decisions if they can understand them. Explainability builds confidence in AI systems.

Accountability

When AI makes a mistake or causes harm, explainability helps identify what went wrong and who’s responsible.

Regulation

Regulations such as the EU AI Act impose transparency obligations on AI systems, particularly those used for high-stakes decisions. Organizations that cannot explain how such systems reach their decisions risk legal consequences.

Debugging and Improvement

Understanding why a model makes certain mistakes helps you fix them. Explainability is essential for model improvement.

Challenges in Explainability

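There’s often a trade-off between accuracy and explainability. Simpler, more interpretable models such as linear models and small decision trees are often less accurate on complex tasks, while the deep models that achieve higher accuracy are harder to explain. The size of this gap depends on the problem, so it’s worth measuring rather than assuming.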

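Explaining complex models remains technically challenging. Some techniques, like LIME and SHAP, are computationally expensive because they require many model evaluations for every prediction they explain. There’s no one-size-fits-all explanation method, and different stakeholders need different types of explanations: a data scientist debugging a model needs more detail than a customer asking why an application was declined.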

Best Practices for XAI

Choose appropriate techniques for your use case and audience. Combine multiple explanation methods for comprehensive understanding. Validate explanations with domain experts. Document explanations thoroughly. Make explanations actionable and understandable to non-experts.

Conclusion

Explainable AI is becoming increasingly important as AI systems play more critical roles in society. By making AI decisions transparent and understandable, we build trust, ensure accountability, and create better systems.

The goal isn’t to eliminate black-box models. Complex models have their place and often deliver better results. The goal is to ensure that when AI makes important decisions affecting people, those decisions can be understood and justified.

