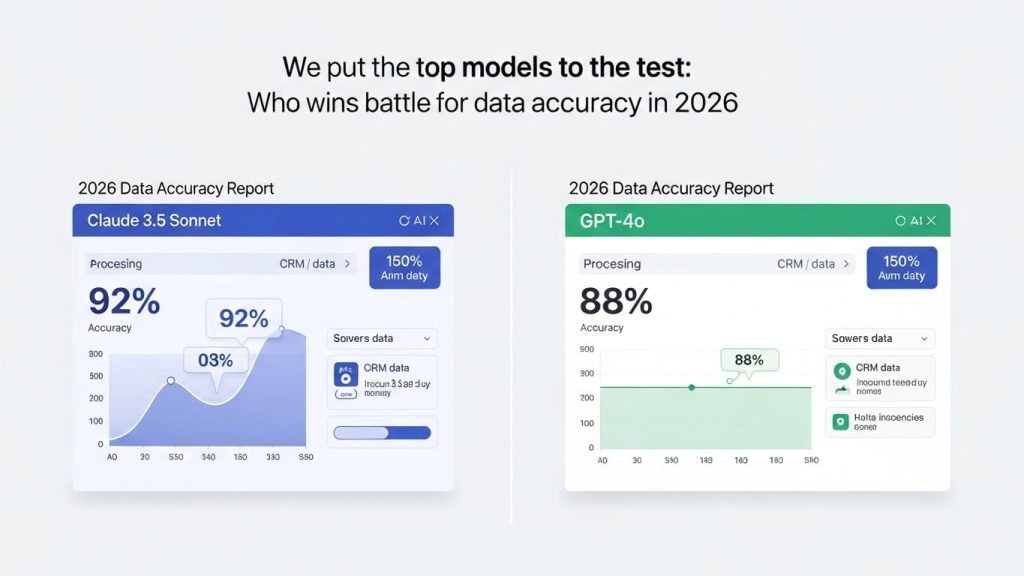

The Great CRM Face-Off: Claude 3.5 vs. GPT-4o

In our latest Case Study, we showed how automation saves time. But automation is only as good as the data it processes. If the AI hallucinates a lead’s phone number or budget, the whole system collapses.

We ran a stress test with 1,000 unformatted raw leads to see which model integrates better with our Business Operations.

The Test: Raw Data Extraction & Formatting

The Task: We fed both models 500 messy emails, Zoom transcripts, and LinkedIn messages. The goal was to extract Name, Budget, Intent Score (1-10), and Next Step, then format them into a JSON string for Make.com.

The Results:

- Claude 3.5 Sonnet: 98.4% Accuracy. It correctly identified intent even when the lead was sarcastic. Zero formatting errors.

- GPT-4o: 91.2% Accuracy. It struggled with sarcasm and had 4 “hallucinations” where it invented phone numbers that weren’t in the text.

Instruction Following (The “Negative Constraint” Test)

We told both models: “Extract the data BUT if the budget is under $1000, mark them as ‘Low Intent’ and do NOT create a follow-up task.”

- Claude’s Performance: Flawless. It followed the negative constraint every single time.

- GPT’s Performance: It occasionally created tasks for low-budget leads, failing to follow the “Do NOT” instruction.

Conclusion: Which Model Should Your Agency Use?

While GPT-4o is faster and cheaper for simple tasks, our experiment proves that for Complex Workflows and reliable CRM data, Claude 3.5 is the superior choice.

Want to see the raw data? Check our Tutorials & Playbooks to see the exact system we used for this test, or get the Prompt Templates we used to run this experiment.