In 2026, we have reached the Point of Indistinguishability. The “Turing Test” is no longer a philosophical milestone; it is a daily obstacle for Google, academic institutions, and every business attempting to protect its brand authority. As AI models like GPT-5 and Claude 4 move toward human-level reasoning, the detection of these outputs has evolved from simple keyword matching to High-Dimensional Pattern Recognition.

To understand AI detection today, one must stop looking at words and start looking at probability distributions. This article provides a comprehensive technical breakdown of how detection works, why it fails, and how to architect content that survives the “Forensic Scrutiny” of the modern web.

I. The Anatomy of a Detector: How Machines Watch Machines

AI detectors in 2026 are not “checkers”; they are Adversarial Classifiers. They operate on the fundamental premise that while an LLM can simulate creativity, it cannot (yet) escape the statistical constraints of its training data.

1. The Perplexity Variable ($P$)

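At its core, an LLM is a “next-token predictor.” It calculates the probability of each candidate token given all the tokens that came before it, not just the single preceding word. Perplexity is a measure of how “surprised” a model is by a sequence of text. Formally, for a text of $N$ tokens, it is the exponential of the average negative log-probability per token: $P = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log p(x_i \mid x_{<i})\right)$.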

- The Logic: AI tends to stay within the “high-probability” lane to ensure coherence. This results in low perplexity.

- The Human Delta: Humans are erratic. We use non-sequiturs, slang that hasn’t been indexed, and personal anecdotes that break the statistical “ideal.” High perplexity is the hallmark of human entropy.
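The calculation behind this contrast can be sketched in a few lines. This is a minimal illustration, assuming the per-token probabilities have already been obtained from some scoring model; the function name `perplexity` and the example probabilities are hypothetical, not taken from any particular detector.

```python
import math

def perplexity(token_probs):
    """Exponential of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

# Hypothetical probabilities a scoring model assigned to each token it saw.
predictable_text = [0.9, 0.8, 0.85, 0.9]   # the model was rarely surprised
surprising_text = [0.9, 0.05, 0.6, 0.01]   # a few very unlikely tokens

print(perplexity(predictable_text))
print(perplexity(surprising_text))
```

A single very unlikely token raises the score sharply, which is why one odd phrase or unindexed slang term can move a text out of the “high-probability” lane.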

2. Burstiness and Structural Variance

Burstiness refers to how much sentence length and complexity vary across a text, typically measured as the Standard Deviation of those values.

- The AI Signature: Machines often produce a rhythmic, predictable cadence—a “harmonic hum” of sentence structures that feel balanced but sterile.

- The Human Signature: We “burst.” We might follow a 30-word complex sentence with a two-word punch. This variance is incredibly difficult for an AI to replicate without explicit prompting.
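As a rough sketch of how this variance might be measured, the snippet below computes the standard deviation of sentence lengths in words. The naive punctuation-based sentence splitter and the function names are assumptions made for illustration; a production detector would likely use a proper tokenizer and richer complexity features.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Population standard deviation of sentence lengths, in words."""
    lengths = sentence_lengths(text)
    if not lengths:
        return 0.0
    return statistics.pstdev(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("We spent three long weeks rebuilding the entire pipeline "
          "from scratch before launch. It broke.")

print(burstiness(uniform))  # 0.0
print(burstiness(varied))   # 5.5
```

The second sample pairs a 13-word sentence with a two-word one, which is exactly the kind of swing described above.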

3. Logit-Based Watermarking

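Some developers (like OpenAI) have experimented with statistical watermarking, often called Cryptographic Watermarking because the pattern is tied to a secret key. This involves nudging the model’s logits at generation time so that it slightly prefers a keyed, pseudo-random subset of tokens, which leaves a hidden mathematical pattern in the output. In 2026, a sophisticated detector that holds the key can scan for these “probabilistic watermarks” even if the text looks perfectly normal to the human eye. Without the key, or after heavy paraphrasing, the signal is much harder to recover.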
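To make the idea concrete, here is a toy sketch of one publicly described family of schemes, often called a “green list” watermark. It is not the method of OpenAI or any other vendor. The key, the `GAMMA` value, the hashing rule, and all function names are assumptions, and the toy generator always picks a green token, whereas a real system would only bias the logits slightly.

```python
import hashlib
import math

GAMMA = 0.5       # assumed share of tokens placed on the "green list" at each step
KEY = "demo-key"  # hypothetical secret shared by the generator and the detector

def is_green(prev_token, token, key=KEY):
    """Keyed pseudo-random test: is `token` on the green list that follows `prev_token`?"""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] / 256 < GAMMA

def watermark_z_score(tokens, key=KEY):
    """How far the green-token count sits above the no-watermark expectation."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, tok, key) for prev, tok in pairs)
    n = len(pairs)
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))

def toy_watermarked_sequence(vocab, length, key=KEY):
    """Stand-in for a watermarking generator: pick a green token whenever one exists."""
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        tokens.append(next((t for t in vocab if is_green(prev, t, key)), vocab[0]))
    return tokens

vocab = [f"w{i}" for i in range(50)]
marked = toy_watermarked_sequence(vocab, 101)
print(watermark_z_score(marked))
```

Unwatermarked text should land near a z-score of zero on average, because about half of its tokens fall on the green list by chance. A detector flags the text only when the score is far above that baseline.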

II. The State of the Market: Comparing the “Detection Giants”

The 2026 landscape is divided into those who scan for patterns and those who scan for intent.

| Provider | Technical Engine | Specialized Use Case | Accuracy (2026 Benchmarks) |
| --- | --- | --- | --- |
| Originality.AI (v4.0) | Multi-Model Ensemble | SEO & Web Publishing | 96.5% on GPT-5 / 91% on Claude |
| GPTZero Enterprise | Deep Linguistic Analysis | Academic Integrity | 98% (with Human-in-the-Loop) |
| Winston AI | OCR + Semantic Scanning | Legal & Formal Documentation | 95% |
| Google Search Console | Behavioral + Vision AI | Content Ranking / Spam Filter | Hidden (Proprietary) |

The “Google Factor”

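Google’s detection is the most misunderstood. Google does not just assess whether a text is AI-generated; it assesses whether the text is “Helpful.” If your AI-generated article provides unique value, Google’s “Helpful Content System” (HCS) can rank it regardless of its origin. However, if Google detects “Mass-Produced Synthetic Spam,” the whole domain’s visibility can be suppressed. E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) is a quality framework from Google’s rater guidelines rather than a published numeric score, so the damage shows up as lost rankings, not as a number you can look up.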

III. The Vulnerabilities: Why Detection is Never 100%

As an expert, I must be candid: Detection is a probabilistic guess, not a binary truth. There are three major technical “blind spots” in 2026.

1. The “Native Language” Bias

Linguistic classifiers are notoriously biased against non-native English speakers. Because ESL (English as a Second Language) writers often use more formal, structured, “textbook” grammar and a narrower vocabulary, their text tends to score low on perplexity, and detectors frequently flag this human-written work as AI. This has created a significant ethical crisis in global recruitment and education.

2. The Technical/Legal Paradox

Text that must be precise—such as a medical manual or a legal contract—has naturally low perplexity. There are only so many ways to describe a “Force Majeure” clause. In these niches, AI detectors are virtually useless because the human “ideal” and the AI “ideal” are identical.

3. The Humanization Loop

We are seeing the rise of Adversarial Humanizers. These are secondary AI models (like “Undetectable.ai” evolutions) specifically trained to take AI output and inject “artificial entropy”—intentional errors, varied burstiness, and slang—to bypass the classifiers. It is a cat-and-mouse game where the mouse is getting exponentially smarter.
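The claim at the start of this section, that a detector’s verdict is a probabilistic guess, can be made concrete with a base-rate calculation. The sketch below applies Bayes’ rule; the detection rates and the share of AI text in the pool are hypothetical numbers chosen for illustration, not benchmarks for any product.

```python
def prob_ai_given_flag(true_positive_rate, false_positive_rate, base_rate):
    """P(text is AI | detector flagged it), by Bayes' rule."""
    flagged_ai = true_positive_rate * base_rate
    flagged_human = false_positive_rate * (1 - base_rate)
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("detector never flags anything under these rates")
    return flagged_ai / total_flagged

# Hypothetical: the detector catches 95% of AI text, wrongly flags 5% of
# human text, and only 10% of the submissions are actually AI-written.
print(round(prob_ai_given_flag(0.95, 0.05, 0.10), 3))  # 0.679
```

Under these assumed numbers, roughly one flagged document in three is human-written, even though the detector looks “95% accurate” on paper. The rarer AI text is in the pool being checked, the worse this ratio becomes.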

IV. The Strategy: How to Build “Detection-Proof” Authority

If you are using AI to scale your business (which you should be), your goal isn’t to “trick” the detector—it is to transcend it. Here is the architectural blueprint for content that survives forensic auditing.

1. The “70/30” Hybridization Rule

The most resilient content is generated by AI but structured and “hooked” by humans.

- The AI Role (70%): Data gathering, initial drafting, and structural formatting.

- The Human Role (30%): The introduction (H1/Lede), the personal anecdotes, the unique conclusions, and the final “voice” edit.

- Result: You maintain the speed of AI while providing the “Perplexity Spikes” that signal human authorship.

2. Injecting Proprietary Data (The “Moat”)

Detectors look for “Common Knowledge” patterns. If your article contains a unique case study, a screenshot of your internal n8n workflow, or a quote from a real interview, you break the “Synthetic Pattern.” An AI model has no access to a specific, real-world event that happened in your office yesterday; it can only invent one, and invented details do not hold up to verification.

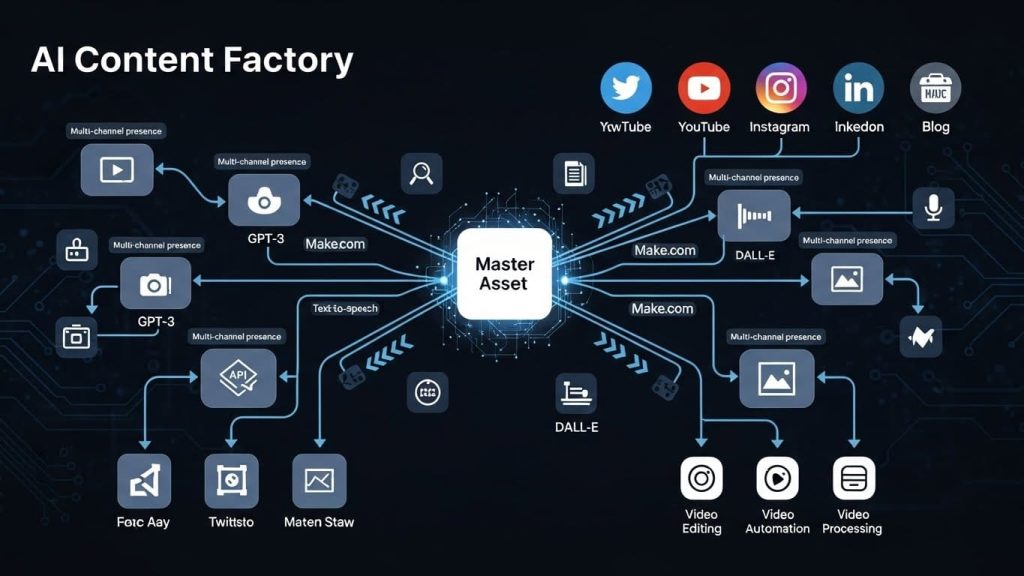

3. The Semantic Shift: Writing for “Multimodal” SEO

In 2026, don’t just write text. Embed original visual data. Our previous guide on Photoshop + n8n automation is crucial here. When Google sees an original, branded infographic that matches the text’s semantic intent, it assigns a high “Originality Score” to the entire page, overriding minor AI flags in the prose.

V. Ethical and Legal Implications: The 2026 Horizon

The legal landscape has caught up. In many jurisdictions, “Synthetic Content” must now be disclosed in the metadata (via C2PA standards).

- The Liability of Hallucination: If your AI-generated medical or financial advice is wrong and was not human-vetted, the legal liability is absolute.

- The Reputation Risk: In 2026, “getting caught” using low-quality AI is the modern equivalent of being caught with a plagiarized thesis. It signals that your brand values Volume over Value.

VI. Architecting Your Internal Detection Policy

Every professional organization must have an “AI Content Governance” policy.

- Mandatory Auditing: Every piece of outward-facing content must be run through at least two detectors (e.g., Originality.AI and GPTZero).

- Threshold Enforcement: Any content scoring above 20% “Probable AI” must be sent back for a “Human Polish” phase.

- Metadata Transparency: Use the “Digital Signature” nodes in your n8n workflows to tag content with its creation method, ensuring future-proof compliance with evolving AI laws.
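The three rules above can be sketched as a small gate in code. This is an illustrative outline only: it makes no real detector API calls, the scores are passed in by hand, the metadata field names are hypothetical, and letting the strictest detector decide is an assumption about how “any content scoring above 20%” applies when two detectors disagree.

```python
from dataclasses import dataclass

AI_THRESHOLD = 0.20  # from the policy: above 20% "Probable AI" needs a human polish
MIN_DETECTORS = 2    # from the policy: at least two detectors per piece

@dataclass
class AuditResult:
    passed: bool
    worst_score: float
    next_step: str
    metadata: dict

def audit(scores, creation_method):
    """Apply the policy to scores given as {detector_name: score in [0, 1]}."""
    if len(scores) < MIN_DETECTORS:
        raise ValueError(f"policy requires at least {MIN_DETECTORS} detectors")
    worst = max(scores.values())  # assumption: the strictest detector decides
    passed = worst <= AI_THRESHOLD
    metadata = {
        "creation_method": creation_method,  # hypothetical field names
        "detectors": sorted(scores),
        "worst_score": worst,
    }
    next_step = "publish" if passed else "human_polish"
    return AuditResult(passed, worst, next_step, metadata)

result = audit({"detector_a": 0.12, "detector_b": 0.31},
               creation_method="ai_draft_human_edit")
print(result.next_step)  # human_polish
```

Using the maximum score is the conservative choice. A team that finds it produces too many false alarms could average the scores instead, at the cost of letting more borderline content through.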

VII. Conclusion: The Survival of the Authentic

AI Detection in 2026 is not about catching “cheaters”; it is about filtering for quality. The machines are getting better at writing, but they are also getting better at spotting their own kind.

The businesses that will thrive are those that use AI to handle the scale, but keep the soul of their content firmly rooted in human experience, unique data, and strategic intent. Don’t fear the detector—use it as a mirror to ensure that your brand still sounds human.

In the age of the algorithm, Authenticity is the only currency that cannot be devalued.