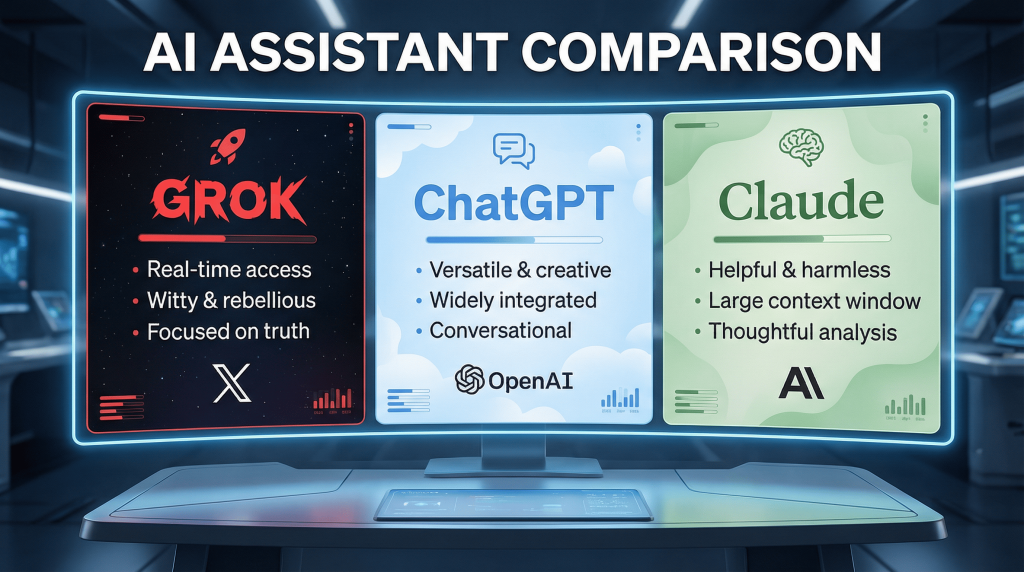

As we move through 2026, a major concern has emerged for developers and enterprises alike: data privacy. While cloud-based AIs like Claude or GPT-4 are powerful, sending sensitive proprietary code to external servers often violates company security policies.

Enter Ollama. This tool has become the gold standard for running Large Language Models (LLMs) locally on your own machine. Here is how to set up your private AI coding bunker.

Why Go Local in 2026?

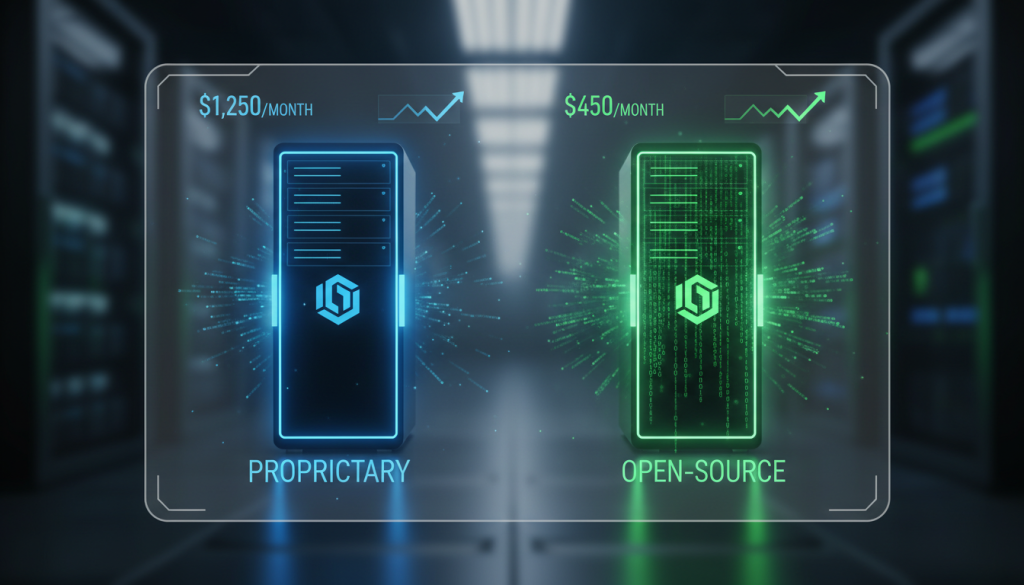

- Absolute Privacy: Your code never leaves your hardware. Zero data is used for training external models.

- Offline Capability: Code on a plane, in a train, or during a network outage without losing your AI assistant.

- Low Latency: No “round-trip” to a server in Virginia or Dublin. Response speed depends only on your own hardware, so the faster your GPU, the faster the answer.

- Cost-Effective: No monthly subscriptions or API tokens. Once you own the hardware, you only pay for the electricity it consumes.

Step 1: Installing the Engine (Ollama)

First, head over to the official site (ollama.com) and download the installer for your OS (macOS, Windows, or Linux).

```bash
# For Linux users, a single curl command does the trick
curl -fsSL https://ollama.com/install.sh | sh
```

Once installed, Ollama runs as a background service on http://localhost:11434, waiting to serve models to your IDE.
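To confirm the service is actually up before touching your editor, you can query its local HTTP API. The sketch below assumes the default address http://localhost:11434 and calls the `/api/tags` endpoint, which lists the models you have downloaded. The function name `ollama_is_up` is our own hypothetical helper, not part of Ollama.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit 0) if an Ollama server answers at the given URL.
# Falls back to the default address http://localhost:11434 when no argument is passed.
ollama_is_up() {
  local base_url="${1:-http://localhost:11434}"
  # -f: treat HTTP errors as failures, -s: silent, --max-time: never hang the shell
  curl -fs --max-time 3 "$base_url/api/tags" >/dev/null
}
```

After defining it, `ollama_is_up && echo "Ollama is running"` gives a quick yes/no; `ollama list` shows the same model list from the CLI.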

Step 2: Choosing Your “Coding Brain”

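In 2026, several local models rival the giants in coding tasks. Here are the top picks. Running `ollama run <model>` downloads (“pulls”) the model on first use and then opens an interactive chat in your terminal; `ollama pull <model>` downloads it without starting a chat: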

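- Llama 3.3 (70B): The all-rounder. Great for general logic. Note that llama3.3 is published only as a 70B model; on machines near the minimum spec below, an 8B model such as Llama 3.1 8B (llama3.1:8b) is the practical fallback.

```bash
ollama run llama3.3
```

- DeepSeek-Coder-V2: Specifically trained on billions of lines of code, it is arguably the best local coding model available right now. The default tag is the 16B “Lite” variant, which fits on consumer hardware.

```bash
ollama run deepseek-coder-v2
```

- StarCoder2: Compact code-completion models (3B, 7B, and 15B) built for efficiency, with comparatively strong results on less common (low-resource) languages.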
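If you want to fetch several models in one go (say, before a flight), a small script can pull only the ones that are missing. This is a sketch under a few assumptions: `has_model` and `ensure_models` are hypothetical helper names, and the parser assumes `ollama list` prints a header row followed by one model per line with its `name:tag` in the first column, treating an untagged name as `:latest`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `ollama list` output on stdin and succeeds
# if the given model (name or name:tag) appears in the first column.
has_model() {
  local wanted="$1"
  # Assumption: an untagged model name means name:latest.
  case "$wanted" in
    *:*) ;;
    *) wanted="$wanted:latest" ;;
  esac
  # Skip the header row (NR > 1) and compare the NAME column exactly.
  awk -v m="$wanted" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

# Hypothetical helper: pulls each requested model unless it is already installed.
ensure_models() {
  local installed model
  installed="$(ollama list)" || return 1
  for model in "$@"; do
    if printf '%s\n' "$installed" | has_model "$model"; then
      echo "already installed: $model"
    else
      ollama pull "$model" || return 1
    fi
  done
}
```

For example, `ensure_models llama3.3 deepseek-coder-v2 starcoder2` downloads whichever of the three you do not have yet and skips the rest.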

Step 3: Connecting to Your IDE (The Bridge)

To actually use these models while you code, you need a “bridge” in your editor. A popular open-source choice in 2026 is Continue (continue.dev).

- Install the Continue extension in VS Code or Cursor.

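- Open your `config.json` in Continue. (Recent Continue releases use a `config.yaml` file instead, so check which one your version expects.)

- Add your local Ollama model to the “models” section: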

```json
{
  "models": [
    {
      "title": "Local DeepSeek",
      "provider": "ollama",
      "model": "deepseek-coder-v2",
      "apiBase": "http://localhost:11434"
    }
  ]
}
```
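If nothing happens once the extension is configured, it helps to check that the model answers through the same HTTP endpoint the extension talks to. The sketch below posts to Ollama's `/api/generate` endpoint with streaming turned off, so the reply arrives as a single JSON object whose `response` field holds the text. `API_BASE`, `build_payload`, and `ask_local` are hypothetical names of our own, and the simple escaping only handles quotes and backslashes in single-line prompts.

```bash
#!/usr/bin/env bash
# Our own variable (not one Ollama reads); matches the apiBase in the Continue config.
API_BASE="${API_BASE:-http://localhost:11434}"

# Hypothetical helper: builds a JSON body for /api/generate.
# Escapes backslashes and double quotes; assumes a single-line prompt.
build_payload() {
  local model="$1"
  local prompt
  prompt="$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')"
  printf '{"model":"%s","prompt":"%s","stream":false}' "$model" "$prompt"
}

# Hypothetical helper: sends one prompt and prints the raw JSON reply.
# The long timeout allows for the model loading into memory on first use.
ask_local() {
  curl -fsS --max-time 300 "$API_BASE/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(build_payload "$1" "$2")"
}
```

For example, `ask_local deepseek-coder-v2 'Write a hello world in Go'` should print a JSON reply. If it does but the editor stays silent, the problem is more likely in the extension's configuration than in Ollama itself.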

Step 4: Hardware Requirements for 2026

To run these models smoothly, your hardware matters:

- Minimum: 16GB RAM + Apple M-series chip or NVIDIA RTX 3060 (8GB VRAM).

- Recommended: 64GB RAM + Apple M3/M4 Max or NVIDIA RTX 4090.

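- Pro Tip: Use quantized models (4-bit or 8-bit) to run large models on consumer-grade hardware without a massive drop in quality. Memory use scales roughly with bit width, so 4-bit weights take about a quarter of the space of 16-bit ones.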
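As a rule of thumb, a model's weights need roughly its parameter count times the bits per weight, divided by 8 to get bytes. A 70B model at 4-bit therefore needs about 70 × 4 / 8 = 35 GB for its weights alone, which is why it belongs in the recommended tier above. The sketch below turns that into a rough sizing check; `weights_gb` and `fits_in` are hypothetical names, and the 20% headroom for the context cache and runtime is an assumption, not a measured figure, since real usage varies with context length and quantization format.

```bash
#!/usr/bin/env bash
# Hypothetical helper: approximate weight size in GB, rounded up.
# Args: parameter count in whole billions, bits per weight (e.g. 4 or 8).
# (params_billion * 1e9 * bits / 8) bytes is about params_billion * bits / 8 GB.
weights_gb() {
  echo $(( ($1 * $2 + 7) / 8 ))
}

# Hypothetical helper: succeeds if the model should fit in the given memory.
# Args: parameter count (billions), bits per weight, available RAM/VRAM in GB.
# Adds an assumed ~20% headroom for the KV cache and runtime, rounded up.
fits_in() {
  local weights need
  weights="$(weights_gb "$1" "$2")"
  need=$(( (weights * 12 + 9) / 10 ))
  [ "$need" -le "$3" ]
}
```

For example, `fits_in 16 4 16 && echo fits` reports that a 4-bit 16B model fits in 16 GB, while `fits_in 70 4 32` fails.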

Conclusion

Setting up a local AI stack with Ollama is the ultimate “power move” for a developer in 2026. It combines the cutting-edge intelligence of LLMs with the privacy of keeping your code on your own machine, and once the models are downloaded it works fully offline. You no longer have to choose between productivity and privacy.

Now that your environment is secure, check out our guide on Advanced Prompt Engineering for Senior Developers.

Local AI is a key part of our Ultimate Guide to AI Coding Tools in 2026.