While CNNs dominate image processing, Recurrent Neural Networks (RNNs) are designed for sequential data, where the order of the elements matters. They have been applied to tasks ranging from language translation to stock price forecasting.

Understanding Sequential Data

Sequential data includes text, where each word depends on the words before it; time series, such as stock prices and weather readings; and audio, including speech and music.

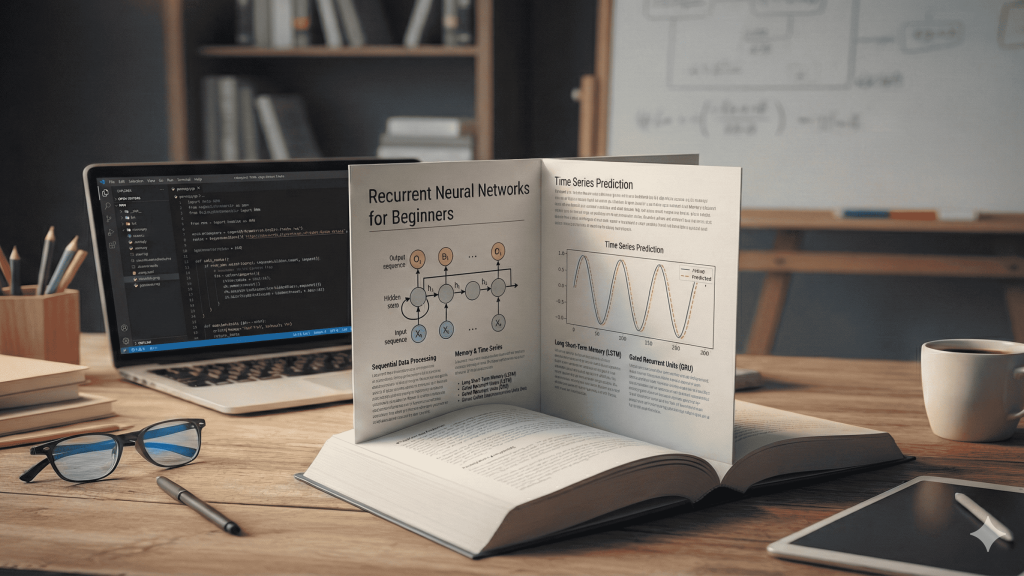

How RNNs Work

RNNs process sequences one element at a time, maintaining a hidden state (a form of memory) that summarizes the steps seen so far. At each step the network combines the current input with the previous hidden state, so its output can depend on earlier context.
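
The recurrence described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: it assumes the common tanh update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b), and the function names and toy weights are hypothetical, chosen only for this example.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_prev + b)."""
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def rnn_forward(xs, W_xh, W_hh, b):
    """Apply rnn_step across a sequence, starting from a zero hidden state."""
    h = [0.0] * len(b)
    states = []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b)
        states.append(h)
    return states

# Hypothetical toy weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.0]

# Both sequences end with the same input (0.0) but differ in the first step.
states_a = rnn_forward([[1.0], [0.0]], W_xh, W_hh, b)
states_b = rnn_forward([[0.0], [0.0]], W_xh, W_hh, b)
```

Although both sequences end with the same input, their final hidden states differ, because the first element is carried forward through the hidden state. That carried-over state is what "memory" means here.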

The Problem with Simple RNNs

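Simple RNNs struggle with long sequences because of the vanishing gradient problem: as the error signal is propagated backward through many time steps, it shrinks repeatedly, so the network learns little from early inputs and in effect forgets them.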
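
A small numeric sketch can make the shrinkage concrete. It assumes a deliberately simplified scalar RNN, h_t = tanh(w * h_{t-1}), with no input term; the weight value and function name are illustrative assumptions. By the chain rule, the sensitivity of h_t to the initial state is a product of per-step factors w * (1 - h_t^2), each smaller than 1 in magnitude when |w| < 1.

```python
import math

def gradient_through_time(w, h0, steps):
    """Return |d h_t / d h_0| for t = 1..steps in the scalar RNN h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    magnitudes = []
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d tanh(u)/du = 1 - tanh(u)^2, and u = w * h_prev.
        grad *= w * (1.0 - h * h)
        magnitudes.append(abs(grad))
    return magnitudes

# Hypothetical setting: recurrent weight 0.5, 50 time steps.
mags = gradient_through_time(w=0.5, h0=0.8, steps=50)
```

With these values every factor is at most 0.5, so the gradient magnitude falls at least geometrically with the number of steps. The same multiplication can instead blow up when the factors exceed 1, which is the related exploding gradient problem.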

LSTMs and GRUs

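Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) mitigate this problem with gates: learned switches that control what to keep, what to overwrite, and what to expose at each step. This lets them learn dependencies over much longer spans than a simple RNN can.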
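
As a rough illustration of the gating idea, here is a single LSTM step reduced to scalars. It follows the standard LSTM equations (forget, input, and output gates plus a candidate value), but the scalar simplification, the parameter layout, and the specific numbers are assumptions made for this sketch; real layers use weight matrices and learn these values during training.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step with scalar input, hidden state, and cell state.

    p maps each gate name to a (w_x, w_h, bias) tuple; the names are illustrative.
    """
    def pre(name):
        w_x, w_h, bias = p[name]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much of the candidate to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # proposed new content

    c = f * c_prev + i * g            # additive cell-state update
    h = o * math.tanh(c)
    return h, c

# Hypothetical parameters: forget gate biased open, input gate biased shut,
# so the cell should hold its value while the inputs are zero.
params = {
    "forget": (0.0, 0.0, 6.0),
    "input": (0.0, 0.0, -6.0),
    "output": (0.0, 0.0, 6.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0
for _ in range(20):
    h, c = lstm_step(0.0, h, c, params)
```

After 20 steps the cell state is still close to its starting value of 1.0, because the forget gate stays near 1 and the update is additive rather than a repeated squashing. That additive path is the main reason gated units retain information over longer spans.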

Applications

Machine translation converts text from one language to another. Speech recognition converts audio to text. Sentiment analysis classifies the opinion or emotion expressed in text. Text generation produces new text one token at a time, such as a continuation of a story.

Conclusion

RNNs and their variants are a foundational approach to tasks involving sequences. They bring memory and context to AI models. Return to Deep Learning and Neural Networks to see the big picture.
