The Modular AI Stack: Engineering High-Velocity Solo Infrastructure

The era of the monolithic SaaS subscription is dead. For the high-performance solo founder, success in 2026 relies on composability.

Relying on a single walled garden (like the standard ChatGPT interface or a generic CRM) creates a single point of failure. It limits throughput, caps automation potential, and locks you in to one vendor. To build a Sovereign Solo Enterprise, you must architect a Modular AI Stack: a decoupling of the intelligence, memory, orchestration, and execution layers.

This technical review analyzes the current best-in-class infrastructure components required to build a system that scales linearly with your ambition, not your headcount.

1. The Inference Layer: Diversifying Intelligence

The “brain” of your stack cannot be a single provider. Operational resilience requires model agnosticism. You need to route tasks based on cost, speed, and reasoning capability.

The Triad of Inference

Complex Reasoning (The Architect): OpenAI GPT-4o or Anthropic Claude 3.5 Sonnet. These models handle high-level strategy, coding, and nuance. They are expensive but necessary for quality assurance.

High-Velocity Execution (The Worker): Groq (Llama 3). For real-time applications such as voice agents or instant data classification, latency kills conversion. Groq offers very low-latency token generation (around $0.08 per 1M tokens, depending on model size) that gives automated interactions a “human” feel.

Privacy & Local Ops (The Vault): Ollama (Mistral/Llama). For sensitive financial data or proprietary IP, running inference locally keeps prompts and documents on your own hardware, so nothing is sent to a third-party API. This is crucial for maintaining the integrity discussed in our Capital Efficiency Guide.
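
The triad above can be expressed as a small routing rule. This is a minimal sketch, not a prescribed implementation: the `Task` fields, the tier labels, and the priority order (privacy first, then speed, then reasoning) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical description of a unit of work to be routed."""
    prompt: str
    sensitive: bool = False  # contains financial data or proprietary IP
    realtime: bool = False   # latency-critical (voice agent, live classification)


# Hypothetical tier labels; map them to real model IDs in your own config.
ARCHITECT = "architect"  # e.g. GPT-4o or Claude 3.5 Sonnet
WORKER = "worker"        # e.g. Llama 3 on Groq
VAULT = "vault"          # e.g. a local model served by Ollama


def choose_tier(task: Task) -> str:
    """Pick an inference tier: privacy wins over speed, speed over reasoning."""
    if task.sensitive:
        return VAULT
    if task.realtime:
        return WORKER
    return ARCHITECT
```

Putting the privacy check first means a task that is both sensitive and latency-critical still stays local, which matches the intent of "The Vault" tier.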

Pro Tip: Use an LLM router (like OpenRouter or a custom Python script) to dynamically switch models. If the API latency of Provider A spikes, the system automatically fails over to Provider B.
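
As a rough sketch of the "custom Python script" option, failover can be a loop over providers in priority order. The `Provider` signature below is an assumption: each callable is expected to wrap a real API client, enforce the timeout itself, and raise an exception when the call is slow or fails.

```python
from typing import Callable, Sequence

# Assumed shape: a provider takes (prompt, timeout_seconds) and returns text.
# It should raise (for example TimeoutError) on a latency spike or API error.
Provider = Callable[[str, float], str]


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Provider]],
    timeout_s: float = 5.0,
) -> tuple[str, str]:
    """Try providers in order; return (provider_name, reply) from the first success."""
    failures = []
    for name, call in providers:
        try:
            return name, call(prompt, timeout_s)
        except Exception as exc:  # any failure triggers failover to the next provider
            failures.append(f"{name}: {exc!r}")
    raise RuntimeError("all providers failed: " + "; ".join(failures))
```

Returning the provider name alongside the reply makes it easy to log how often the fallback is actually used.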

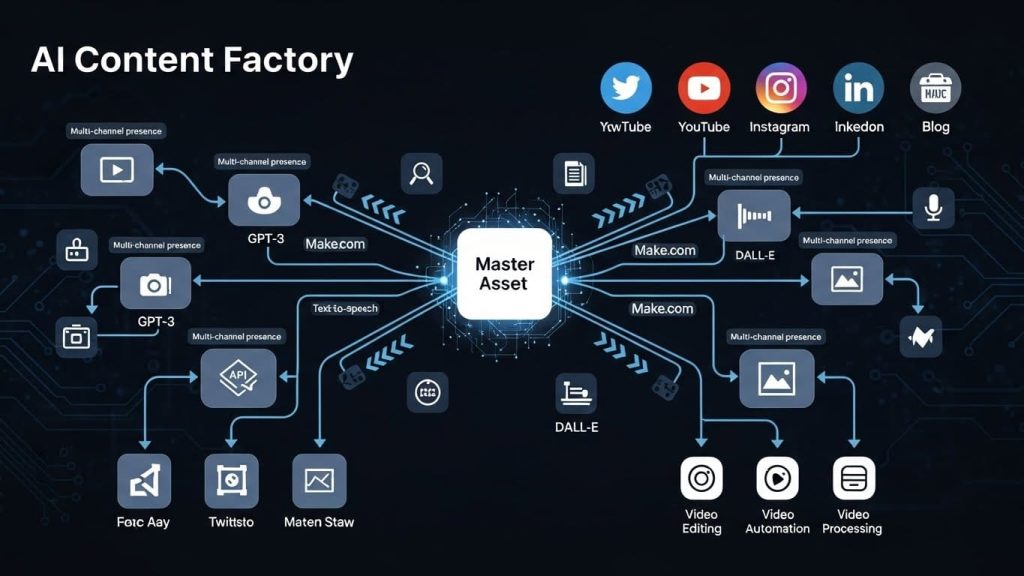

2. The Orchestration Layer: The Nervous System

Models are useless without connection. The orchestration layer dictates how data flows between your intelligence and your applications. This is where Agentic Workflows live.

n8n (Self-Hosted) vs. Make (SaaS)

While Make.com remains the standard for quick visual prototyping, n8n is the superior choice for the technical solo founder.

| Feature | Make (Enterprise) | n8n (Self-Hosted) |
| :--- | :--- | :--- |
| Cost Scaling | High (Per Operation) | Flat (Server Cost) |
| Data Privacy | Cloud-Based | 100% Sovereign |
| Python/JS Execution | Limited | Full Support |
| Verdict | Good for MVP | Essential for Scaling |

Recommendation: Deploy n8n on a DigitalOcean droplet. This grants you unlimited workflow executions for a fixed cost (~$20/mo), preventing the “success tax” that occurs when high-volume automations (like an Autonomic Outbound Engine) ramp up.
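
To make the “success tax” concrete, here is a back-of-the-envelope comparison. The $20 flat cost comes from the recommendation above; the per-operation price is a placeholder assumption, not a quoted Make rate, so substitute the figure from your own plan.

```python
def per_operation_cost(operations: int, price_per_1k_ops: float) -> float:
    """Monthly bill on a per-operation plan."""
    return operations / 1000 * price_per_1k_ops


def break_even_operations(flat_monthly_cost: float, price_per_1k_ops: float) -> float:
    """Monthly operation count at which a flat-cost server becomes cheaper."""
    return flat_monthly_cost / price_per_1k_ops * 1000


FLAT_SERVER_COST = 20.0         # ~$20/mo droplet, as stated above
ASSUMED_PRICE_PER_1K_OPS = 1.0  # hypothetical placeholder, not a real price

if __name__ == "__main__":
    threshold = break_even_operations(FLAT_SERVER_COST, ASSUMED_PRICE_PER_1K_OPS)
    print(f"Break-even at {threshold:,.0f} operations per month")
    for ops in (5_000, 50_000, 500_000):
        metered = per_operation_cost(ops, ASSUMED_PRICE_PER_1K_OPS)
        print(f"{ops:>7,} ops: metered ${metered:,.2f} vs flat ${FLAT_SERVER_COST:,.2f}")
```

The flat figure ignores your own maintenance time and any need for a larger server at very high volume, so treat the break-even point as a lower bound.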

3. The Memory Layer: Vector Databases (RAG)

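Out of the box, LLMs tend to hallucinate when asked about things they have no context for, such as your internal documents. To build a business that understands *your* specific customers and SOPs, you need Retrieval-Augmented Generation (RAG): retrieving the relevant passages from your own data and passing them to the model along with the question.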

Pinecone vs. Weaviate

Pinecone: Serverless, easy to implement, handles massive scale with zero maintenance. Best for founders who want to “set and forget.”

Weaviate: Open-source and customizable. Allows for hybrid search (keyword + vector), which often yields better retrieval results for technical documentation or legal contracts.
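
The idea behind hybrid search can be illustrated with a toy scoring function. This is not Weaviate's API or its ranking algorithm, only a sketch of blending a keyword score with a vector score; the function names and the `alpha` weighting are assumptions for the example.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear verbatim in the text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_score(query: str, query_vec: list[float],
                 doc_text: str, doc_vec: list[float],
                 alpha: float = 0.5) -> float:
    """Blend vector and keyword relevance; alpha=1 is pure vector, alpha=0 pure keyword."""
    return alpha * cosine(query_vec, doc_vec) + (1 - alpha) * keyword_score(query, doc_text)
```

Exact identifiers such as clause numbers or function names are where the keyword half helps: an embedding may rank a paraphrase highest, while the literal match pulls the document that actually contains the term back to the top.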

The Stack Implementation:

Connect your Knowledge Base (Notion/Obsidian) → n8n Webhook → OpenAI Embedding Model → Pinecone.

This loop ensures that every time you update a strategy document, your AI agents instantly have access to the new protocol.
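
The ingestion step of that pipeline can be sketched in plain Python. Everything here is a stand-in: `embed` represents a call to an embedding model, and `InMemoryIndex` is a hypothetical placeholder for the vector database client, so the structure rather than the specific calls is the point.

```python
from typing import Callable

Embedder = Callable[[str], list[float]]  # assumed: text in, embedding vector out


def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Split a document into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


class InMemoryIndex:
    """Hypothetical stand-in for a vector database client."""

    def __init__(self) -> None:
        self.records: dict[str, dict] = {}

    def upsert(self, record_id: str, vector: list[float], metadata: dict) -> None:
        self.records[record_id] = {"vector": vector, "metadata": metadata}

    def delete_prefix(self, prefix: str) -> None:
        for record_id in [r for r in self.records if r.startswith(prefix)]:
            del self.records[record_id]


def ingest_document(doc_id: str, text: str, embed: Embedder,
                    index: InMemoryIndex, max_words: int = 200) -> int:
    """Re-index one document: drop its old chunks, then embed and store the new ones."""
    index.delete_prefix(f"{doc_id}#")
    chunks = chunk_text(text, max_words)
    for n, chunk in enumerate(chunks):
        index.upsert(f"{doc_id}#{n}", embed(chunk), {"doc_id": doc_id, "text": chunk})
    return len(chunks)
```

Deleting the old chunks before re-inserting matters when an updated document gets shorter; otherwise stale passages from the previous version would keep showing up in retrieval.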

4. The Interface Layer: Headless vs. UI

Finally, how do you interact with your stack?

FlowiseAI / LangFlow: These are low-code drag-and-drop builders specifically for LLM apps. They allow you to build custom chatbots (e.g., “Internal Legal Advisor” or “Customer Support Bot”) that utilize your specific RAG database.

Streamlit (Python): For pure data visualization and dashboarding. If you need to visualize the ROI of your Capital Efficiency strategies, a custom Streamlit app connected to your database is unbeatable.

Summary: The Ideal 2026 Solo Stack

If you are building the Sovereign Solo Enterprise today, this is the blueprint for maximum leverage:

1. Orchestrator: n8n (Self-hosted)

2. Intelligence: OpenRouter (GPT-4o + Claude 3.5 + Llama 3 on Groq)

3. Memory: Pinecone (Serverless)

4. Interface: FlowiseAI (for internal tools)

5. Integration: Custom Webhooks to Slack/Discord for “Human-in-the-loop” notifications.
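
For the human-in-the-loop item, a notification can be a single HTTP POST. The sketch below targets a Slack incoming webhook, which accepts a JSON body with a `text` field; the message format and function names are illustrative assumptions, and the webhook URL must come from your own workspace.

```python
import json
import urllib.request


def build_approval_message(workflow: str, summary: str) -> dict:
    """Build the JSON payload for a Slack incoming webhook."""
    return {"text": f"[Approval needed] {workflow}: {summary}"}


def send_to_webhook(webhook_url: str, payload: dict, timeout_s: float = 10.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return response.status
```

Discord webhooks expect the message under a `content` field rather than `text`, so the payload builder is the only part that needs to change between the two.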

This modular approach ensures that when the next breakthrough model drops, you swap a model name or an API key in your router instead of rebuilding your entire business.

Alex’s Final Verdict

The ‘All-in-One’ AI platforms are a trap for the sophisticated operator. They offer convenience at the cost of control and margin. By adopting a modular stack (n8n + Vector DB + API Routing), you build an asset that is transferable, scalable, and significantly cheaper at volume. Start with n8n self-hosting; it is the highest-leverage technical decision you will make this quarter.