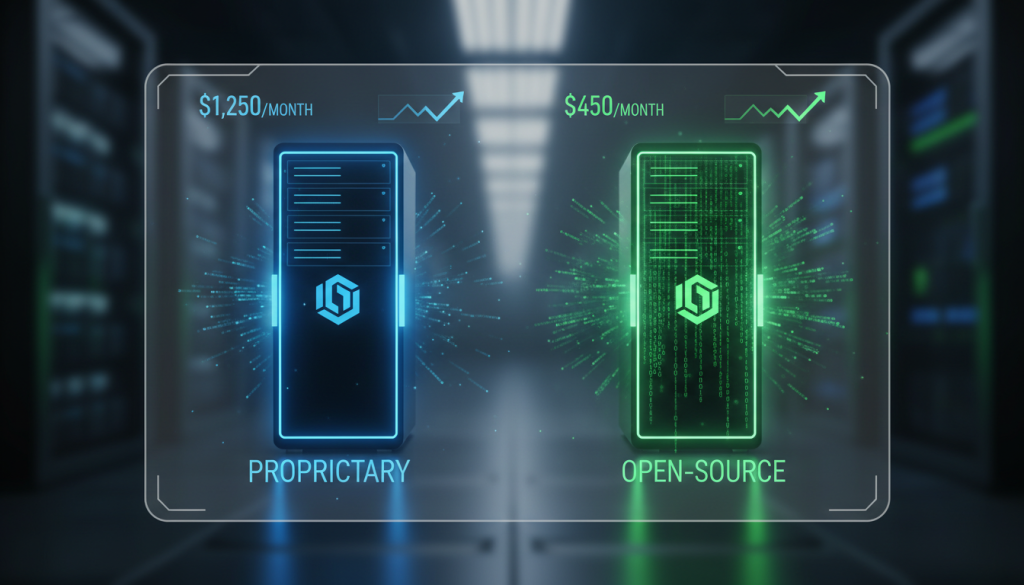

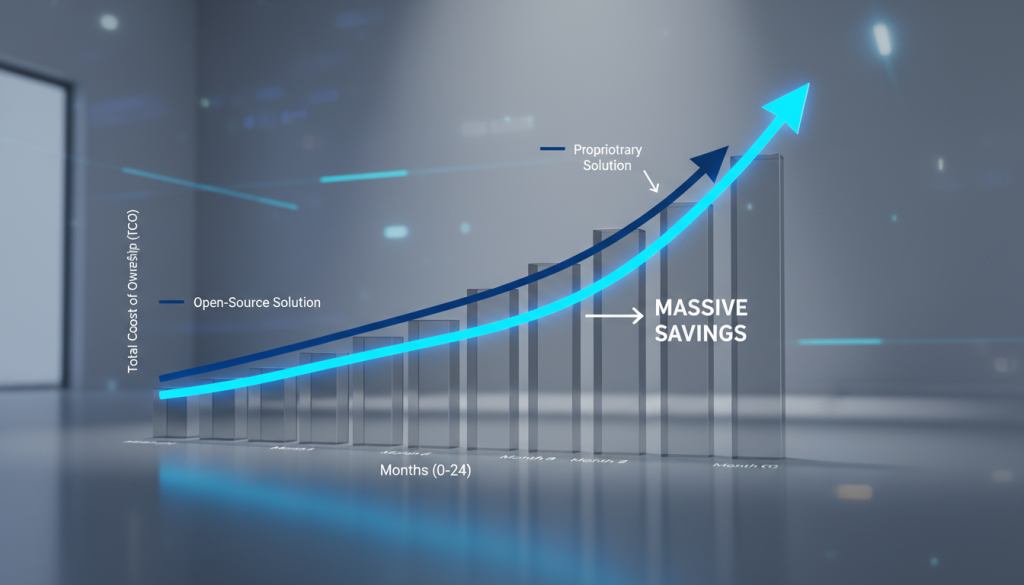

Winfield’s Take: By 2026, the “Intelligence Premium” has effectively collapsed. The decision between proprietary and open-source orchestration is no longer about model capability—it’s about unit economics. For high-volume B2B operations, proprietary orchestration is a “convenience tax” that eats 40% of your gross margin. Open-source is no longer a hobbyist choice; it is the standard for enterprise-grade scalability and data sovereignty.

So What?

Optimizing your orchestration layer allows you to reallocate six to seven figures of annual B2B AI infra spend toward R&D or customer acquisition. Transitioning from a closed-loop system (like OpenAI’s Assistants API) to a self-hosted stack (vLLM + LangGraph) reduces latency by 30% and cuts token costs by up to 85% at scale.

Open-Source vs Proprietary LLM Orchestration: A 2026 Cost-Benefit Analysis

As we move into 2026, the enterprise AI landscape has bifurcated. We have moved past the “magic” phase of AI and into the “industrialization” phase. For B2B growth engines, the orchestration layer—the logic that connects models to tools, memory, and data—is where the real cost battle is won or lost.

The Proprietary Trap: The “Easy” Tax

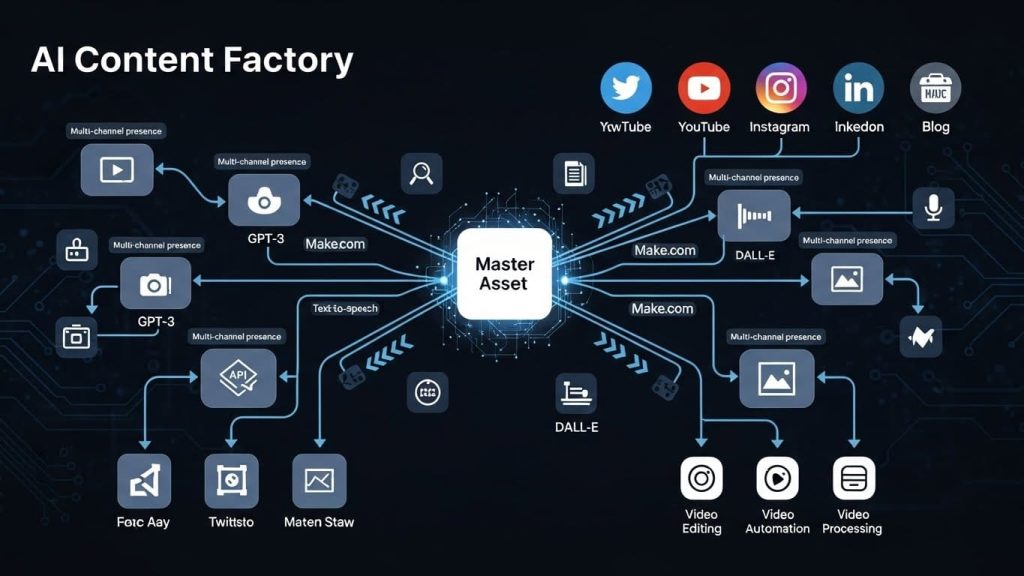

Proprietary orchestration (e.g., OpenAI, Anthropic, or Azure AI Studio) offers the fastest time-to-market. For startups or small-scale pilots using tools like Make.com to bridge basic workflows, this is the logical starting point.

The Benefits

* Zero Infra Overhead: No need to manage Kubernetes clusters or GPU availability.

* Integrated Security: SOC2 and HIPAA compliance are often baked into the platform.

* Superior Tool Use: Proprietary models still hold a slight edge in complex function calling and multi-step reasoning.

The 2026 Reality

The “Easy” tax is real. As your volume hits 100M+ tokens per month, the per-token markup on proprietary orchestration becomes unsustainable. You are paying for their R&D, not just your compute.

Open-Source Orchestration: The Margin Play

Self-hosted AI models and orchestration frameworks (LangChain, LlamaIndex, Haystack) have reached parity with proprietary systems in 90% of B2B use cases—specifically in data enrichment and lead scoring.

The TCO (Total Cost of Ownership) Breakdown

In 2026, the enterprise LLM comparison favors open-source for high-frequency, low-latency tasks.

| Metric | Proprietary (API-based) | Open-Source (Self-Hosted) |
| :--- | :--- | :--- |
| Setup Cost | Low ($0 – $1k) | High ($10k – $50k) |
| Unit Cost (per 1k tokens) | $0.01 – $0.10 | $0.001 – $0.005 |
| Data Privacy | Third-party dependent | Total Sovereignty |
| Customization | Limited (Fine-tuning only) | Full (Adapter, LoRA, Quantization) |
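To make the table concrete, here is a rough break-even sketch. It assumes the self-hosted unit cost already covers ongoing compute, that the setup cost is paid once, and that volume stays flat. The function names are illustrative, and the sample inputs are simply the midpoints of the ranges in the table, not measured figures.

```python
from typing import Optional


def monthly_token_cost(tokens_per_month: int, price_per_1k: float) -> float:
    """Monthly spend in dollars for a given volume and per-1k-token price."""
    return tokens_per_month / 1_000 * price_per_1k


def break_even_months(
    setup_cost: float,
    tokens_per_month: int,
    api_price_per_1k: float,
    self_hosted_price_per_1k: float,
) -> Optional[float]:
    """Months until the one-time setup cost is recovered by per-token savings.

    Returns None when self-hosting is not cheaper per token.
    """
    monthly_saving = (
        monthly_token_cost(tokens_per_month, api_price_per_1k)
        - monthly_token_cost(tokens_per_month, self_hosted_price_per_1k)
    )
    if monthly_saving <= 0:
        return None
    return setup_cost / monthly_saving


if __name__ == "__main__":
    volume = 100_000_000  # 100M tokens per month, the threshold cited earlier
    # Midpoints of the table's ranges (an illustrative choice, not a benchmark).
    months = break_even_months(30_000, volume, 0.05, 0.003)
    print(f"Break-even after about {months:.1f} months")
```

The result is sensitive to which end of each range applies to you, so run it with your own invoice numbers before committing to a migration.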

Integrating with the Growth Stack

Modern B2B growth relies on the orchestration of data between platforms. Whether you use Clay for hyper-personalized outbound or Instantly for cold email automation, the backend orchestration dictates your profitability.

1. Data Enrichment: Use open-source models (like Llama 3.4 or Mistral-Next) for high-volume scraping and categorization.

2. Workflow Automation: Use Make.com as the “glue” but point the API calls to your self-hosted vLLM endpoint rather than a proprietary API.

3. Lead Scoring: High-volume scoring is 70% cheaper when run on your own H100/B200 instances.
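Pointing a workflow at a self-hosted endpoint is mostly a URL change, because vLLM can expose an OpenAI-compatible HTTP server. The sketch below builds such a request using only the standard library. The base URL assumes a local server on vLLM's default port, and `MODEL_NAME` is a hypothetical placeholder for whatever model you actually serve.

```python
import json
import urllib.request

# Assumptions: a vLLM OpenAI-compatible server is reachable at this address,
# and MODEL_NAME is a placeholder, not a real model identifier.
VLLM_BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "your-org/your-model"


def build_chat_request(
    prompt: str,
    base_url: str = VLLM_BASE_URL,
    model: str = MODEL_NAME,
) -> urllib.request.Request:
    """Build a chat-completions POST request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.0,
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A generic HTTP step in an automation tool would send the same JSON body to the same path, so switching from a proprietary API to the self-hosted one should only require changing the base URL, the model name, and any auth header.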

The Hybrid Strategy: The 2026 Gold Standard

The most profitable B2B firms don’t choose one; they use a “Router Architecture.”

* Tier 1 (Complex Reasoning): Send to proprietary models (GPT-5 or Claude 4).

* Tier 2 (Repetitive Tasks): Route to self-hosted open-source models.

This approach minimizes LLM orchestration costs while maintaining the highest possible output quality.
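A minimal sketch of the routing decision, assuming each job arrives with a task label. The labels and the `route` function are hypothetical. A production router might also weigh prompt length, confidence scores, or fallback on errors.

```python
from enum import Enum


class Tier(Enum):
    PROPRIETARY = "proprietary"  # Tier 1: complex reasoning
    SELF_HOSTED = "self_hosted"  # Tier 2: repetitive tasks


# Hypothetical task labels for high-volume, repetitive work.
REPETITIVE_TASKS = frozenset({"data_enrichment", "categorization", "lead_scoring"})


def route(task_type: str) -> Tier:
    """Send known repetitive tasks to the self-hosted tier.

    Anything unrecognized defaults to the proprietary tier, trading cost
    for output quality when the router is unsure.
    """
    if task_type in REPETITIVE_TASKS:
        return Tier.SELF_HOSTED
    return Tier.PROPRIETARY


if __name__ == "__main__":
    for task in ("lead_scoring", "contract_negotiation_summary"):
        print(task, "->", route(task).value)
```

Defaulting unknown tasks to the proprietary tier is a deliberate choice here: a misrouted complex task costs more in quality than a misrouted simple task costs in tokens.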

Actionable Tools for Transition

* vLLM: For high-throughput serving of open-source models.

* LangGraph: For building complex, stateful agents that don’t lock you into a single provider.

* Clay: For feeding refined data into your orchestration layer.

* Helicone: For observability and cost tracking across both proprietary and open-source stacks.

Further Reading

To further optimize your growth infrastructure, check out our guide on `scaling-b2b-growth-engines` and our deep dive into `ai-infrastructure-roi`.

The bottom line: In 2026, if you are still 100% proprietary, you aren’t an AI company; you’re a reseller with shrinking margins. Move your high-volume orchestration to open-source or prepare to be outpriced by competitors who did.